Introduction

Reproducibility of results is crucial to all scientific disciplines (Giles 2006), a fundamental scientific principle, and a hallmark of cumulative science (Axelrod 1997). The reproducibility of simulation experiments has gained attention with the increasing application of computational methods over the past two decades (Stodden et al. 2016). Simulation models can be verified by reproducing identical or at least similar results. Moreover, replicated models allow to conduct further research on a reliable basis. Still, as in other scientific endeavors (Nosek et al. 2015), independent replications of simulation studies are lacking (Heath et al. 2009; Janssen 2017).

Potential reasons for the shortage of independent model replications are manifold: lacking incentives for researchers, deficient communication of model information, uncertainty in how to validate replicated results, and the inherent difficulty of re-implementing (prototype) models (Fachada et al. 2017)[1]. Agent-based models, moreover, are built on more assumptions than traditional models due to their high degree of disaggregation and bottom-up logic, rendering more difficult the verification and validation of these models (Zhong & Kim 2010). Replication efforts of agent-based models may also lack supporting methods.

This paper shows how replicated simulation models can be used to develop theory, which could increase the incentives to publish replicated work. Both replication and the subsequent theory development are fostered here through the use of simulation standards, such as the ODD (Overview, Design concepts, and Details) protocol and DOE (design of experiments) principles; these standards were not used when the model we replicate was initially developed, presented, and analyzed. For this exercise, we use the agent-based simulation model of organizational routines by Miller et al. (2012), examining the relationship between different types of individual memory and organizational routines. Although 158 publications to date have cited this study, none so far have replicated the model.

We selected this model for our replication study for several further reasons. First, the model is highly original in its approach to address the micro-foundations of organizational routines by modeling agents’ procedural, declarative, and transactive memory,[2] enabling an investigation of the dynamic relationship between individual cognitive properties and both the formation and the performance of organizational routines. Second, it is currently one of the most frequently cited agent-based models of organizational routines.[3] Third, it was published in the reputed Journal of Management Studies, not a typical outlet for agent-based simulation studies. Finally, it has the potential to support further development of theory, and the fact that it did not use simulation standards enables us to demonstrate their potential benefits.

This paper proceeds in three main steps in order to show how a replicated simulation model can be used both to generalize previous results and to refine theory: (1) replicate and verify the model, comparing results with those of Miller et al. (2012);[4] (2) test the usefulness of agent-based modeling standards for replication, such as the ODD protocol and DOE principles; and (3) develop theoretical understanding of the modeled organizational system by extending the simulation experiments on verified grounds.

We successfully reproduce the results of Miller et al. (2012) in the replicated model. The ODD structure helps to systematically extract information from the original model, while DOE principles guide the experimental analysis of the model and enhance interpretability of the results. For example, we clarify one ambiguous model assumption. For theory development, we generalize the scope of the replicated model by investigating how additional scenarios, such as a merger or a volatile environment, affect routine formation and performance, as well as relating previous and new findings to prominent constructs in the literature.

The remainder of this paper is structured as follows. The next section reviews relevant literature concerning replication, simulation standards, and theory development. We then introduce our replication methodology, where we apply the ODD protocol and DOE principles in the context of the simulation model replication. The replicated model is then used to generalize and refine previous theoretical insights. The final section concludes and provides an outlook for further research.

Related Literature

Replication, in general, is considered a cornerstone of good science. The successful replication of results powerfully fosters the credibility of a study. Besides, replications can be used to advance the knowledge in a field, in the sense that the original study design can be extended, generalized, and applied in new domains. Replications allow linking existing and new knowledge (Schmidt 2009) and reflect an ideal of science as an incremental process of cumulative knowledge production that avoids “reinventing the wheel”(Richardson 2017).

Computational models successfully replicated by independent researchers are considered to be more reliable (Sansores & Pavón 2005) and credible (Zhong & Kim 2010). Replications can reveal three types of errors: (1) programming errors; (2) misrepresentations of what was actually simulated; and (3) errors in the analysis of simulation results (Axelrod 1997; Sansores & Pavón 2005). A replication might also reveal hidden, undocumented, or ambiguous assumptions (Miodownik et al. 2010), which can affect the fit of the implemented model with the world to be represented.

The current practice stands in stark contrast to the often-stated importance of replication. Nosek et al. (2015) sparked intense discussion of a potential “replication crisis” in fields as diverse as psychology, economics, and medicine. While much of this discussion concerned empirical areas, replicability and replication also have high relevance for computational modeling (Miłkowski et al. 2018; Monks et al. 2019). Nevertheless, most agent- based models have not been replicated (Heath et al. 2009; Legendi & Gulyas 2012; Rand & Wilensky 2006).[5] Most researchers build new models instead of using existing models (Donkin et al. 2017; Thiele & Grimm 2015), a practice which hampers cumulative and collective learning and raises the costs of modeling (Dawid et al. 2019; Monks et al. 2019). [6] Replicated models can also provide a good starting point for theory development (Lorscheid et al. 2019).

Recently developed standards and guidelines to enable rigorous simulation modeling and model analysis (Grimm et al. 2010; Lorscheid et al. 2012; Rand & Rust 2011; Richiardi et al. 2006) can also support the replication process. Social simulation researchers increasingly acknowledge such standards as the ODD protocol and DOE principles (Hauke et al. 2017). The ODD protocol allows the standardized communication of models (Grimm et al. 2006, Grimm et al. 2010), while DOE principles can foster the systematic analysis and communication of model behavior (Lorscheid et al. 2012; Padhi et al. 2013). Using these standards can help researchers compare simulation models, designs, and results.

Given the cumulative nature of science, replication, ideally supported by these standards, can potentially help to build theory through simulation. Among the many ways to develop theory (see Lorscheid et al. 2019), we focus here on the ideas of Davis et al. (2007),[7] who position the elaboration of simple theories via simulation experiments in a “sweet spot” between theory-creating research, formal modeling, and empirical, theory-testing research. Basic or simple theory[8] typically stems from individual cases or formal modeling; the authors describe it as follows:

By simple theory, we mean undeveloped theory that has only a few constructs and related propositions with modest empirical or analytic grounding such that the propositions are in all likelihood correct but are currently limited by weak conceptualization of constructs, few propositions linking these constructs together, and/or rough underlying theoretical logic. Simple theory also includes basic processes that may be known (e.g., competition, imitation) but that have interactions that are only vaguely understood, if at all. Thus, simple theory contrasts with well-developed theory, such as institutional and transaction cost theories that have multiple and clearly defined theoretical constructs (e.g., normative structures, mimetic diffusion, asset specificity, uncertainty), well-established theoretical propositions that have received extensive empirical grounding, and well-elaborated theoretical logic. Simple theory also contrasts with situations where there is no real theoretical understanding of the phenomena. (Davis et al. 2007, p. 482)

In this spirit, we later contribute to the literature on dynamic capabilities, [9] specifically from the perspective of knowledge integration. Despite a large body of research, the concept of dynamic capabilities has not reached the level of elaboration of other theories in the field of strategic management or organizational science (Helfat & Peteraf 2009; Pisano 2015). This is perhaps because the concept has a longitudinal and processual focus and because empirical data are difficult to obtain; all these factors make simulation particularly useful for theory development (Davis et al. 2007).

In this regard, we posit that simulations can strengthen the formal understanding of knowledge-integrating processes as one potential micro-foundation for dynamic capabilities. To this end, we begin with a replicated model of Miller et al. (2012), who acknowledge their study’s contribution to the literature on dynamic capabilities, and then conduct several additional simulation experiments. We focus on the representation of underlying knowledge structures as a determinant for the effectiveness of dynamic capabilities. We use formal modeling to increase precision, compared to previously used verbal models (Smaldino et al. 2015), in the underlying theoretical logic and the description of the connected constructs. In doing so, we refine the theory of dynamic capabilities by expressing knowledge-integrating processes as a potential mechanism affecting knowledge structures’ underlying routines. Hence, we aim to strengthen the conceptualization of constructs. At the same time, we generalize the concept of knowledge structures in routines’ formation by showing the benefits of this concept in new contexts, such as mergers.

Method

The replication re-implements the conceptual model in a different software and hardware environment to ensure that neither hardware nor software specifics drive results (Miodownik et al. 2010; Wilensky & Rand 2007). Greater differences in the implementation yield stronger verification if the model nevertheless produces the same results.

Table 1 compares the features of the original study and our replication. The replication is performed by independent researchers, which enhances the objectivity. The conceptual model is re-implemented in a different software environment, which allows the detection of coding issues and effects induced by different stochastic algorithms. We chose NetLogo for re-implementation, a widely-used agent-based simulation software package (Hauke et al. 2017; Rand & Rust 2011). A significant difference between the original model implementation and our re-implementation is that we apply the relatively recently established modeling standards of ODD and DOE. This enables us to uncover potential ambiguities hampering a fully conclusive replication process, necessitating the exploration of implicitly made assumptions.

| Dimension | Original study | Replication |

| Year | 2012 (published) | 2020 |

| Authors | Miller, Pentland, Choi | Hauke, Achter, Meyer |

| Simulation sohware | MATLAB 7 | NetLogo 6.0 |

| Model documentation | individual structure | ODD protocol |

| Model analysis | selected experiments | selected experiments + DOE |

The replication aims to reproduce the output pattern of the original model (Grimm et al. 2005) as a criterion of success (Wilensky & Rand 2007). We further evaluate our replication according to the three-tier classification of Axelrod (Axelrod 1997):

- The re-implemented model generates identical results to the original model. Such “numerical identity” is only possible with a model having no stochastic elements or using the same random number generator and seeds.

- The results of the re-implemented model do not statistically deviate from the original; they are “distributionally equivalent,” which is sufficient for most purposes.

- The results of the re-implemented model show “relational equivalence” to the results produced by the original model. This weakest level refers to models with approximately similar internal relationships among their results. For example, output functions may have comparable gradients but deviate statistically (e.g., differing coefficients of determination).

Additional DOE analysis (see Appendix C) allows examination “under the hood” of a simulation result. Opening the typically “black box” of simulation results allows systematic verification and validation, further increasing the credibility of the replication. [10] Based on the replicated model, we perform additional experiments to complement and extend the results of Miller et al. (2012), thereby developing a deeper understanding of routines by analyzing agents’ knowledge base and developing a broader understanding by modeling merging organizations and organizations operating in volatile environments.

Model Description

A condensed model description follows below (for a full description, see the ODD protocol in Appendix A). [11] The model aims to show how cognitive properties of individuals and their distinct forms of memory affect the formation and performance of organizational routines in environments characterized both by stability and by crisis (see also Miller et al. 2012).

Table 2 overviews the model parameters. Agents represent human individuals; together, they form an organization. By default, the organization comprises \(n\) agents. The organization must handle problems that it faces from its environment. A problem consists of a sequence of \(k\) different tasks (Miller et al. 2012).

| Variable | Description | Value (Default) |

| \(n\) | Number of agents in the organization | 10, 30, 50 |

| \(k\) | Number of different tasks in a problem | 10 |

| \(a\) | Task awareness of an agent | 1, 5, 10 |

| \(p_t\) | Probability that an agent updates its transactive memory | 0.25, 0.5, 0.75, 1.00 |

| \(p_d\) | Probability that an agent updates its declarative memory | 0.25, 0.5, 0.75, 1.00 |

| \(w_d\) | Declarative memory capacity of an agent | 1, 25, 50 |

Agents have different skills, though skills themselves are not varied. Each agent has the skill to perform a particular task (Miller et al. 2012). The number of agents equals at least the number of different tasks in a problem, thus ensuring that the organization is always capable of solving a problem. The number of agents can exceed the number of tasks tasks (\(n > k\)), according to the parameter ranges (Miller et al. 2012). The \(k\) different skills are assumed to be distributed uniformly among the agents.[12]

Any agent is aware of a number a of randomly assigned tasks, and each agent is at least aware of the task the agent is skilled for (Miller et al. 2012). Agents can recognize tasks of which they are aware and are blind to unfamiliar tasks (Miller et al. 2012). Each agent is aware of a limited number of tasks in any problem (\(1 \leq a \leq k\)).

Agents have a chance to memorize a subsequent task \(w_d\) in their declarative memory once they have performed a task and handed the problem over to another agent, who then accomplishes the next task. An agent memorizes a task with a certain probability given by the variable \(p_d\). Additionally, agents can memorize the skills of other agents in their transactive memory. The number of agents and their skills which each agent can memorize is limited by the number of agents in the organization. By default, the probability \(p_t\) is 0.5 that an agent will add an entry to transactive memory (Miller et al. 2012).

Agents are distributed across the organization. Scale and distance are not modeled explicitly, but time is crucial. First, operationally, each organizational problem-solving process is time-consuming. Second, strategically, an organization that consecutively solves problems might form routines over time.

Organizations have to perform the tasks in a given order to solve a problem. Once each task is performed, the problem is solved (Miller et al. 2012). The organization copes with several problems over time, whether recurring or changing in terms of the task sequence.

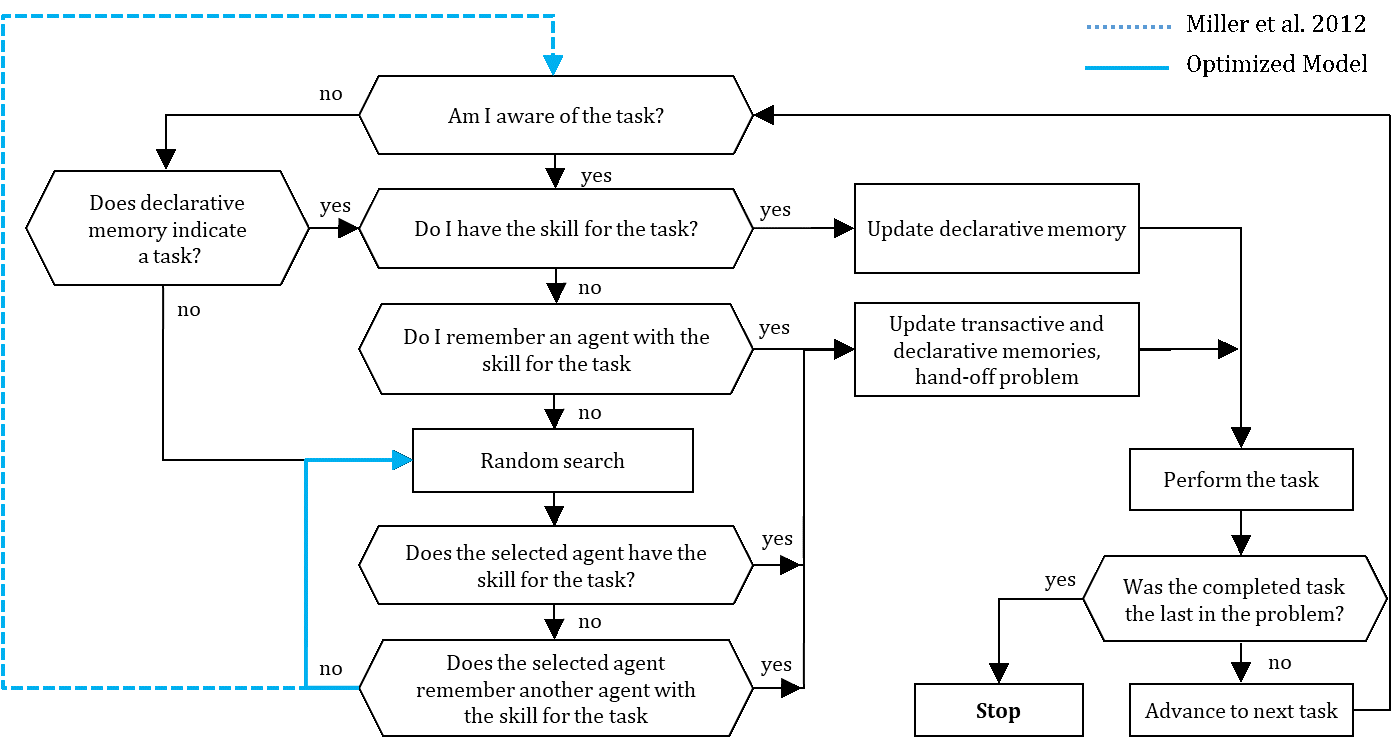

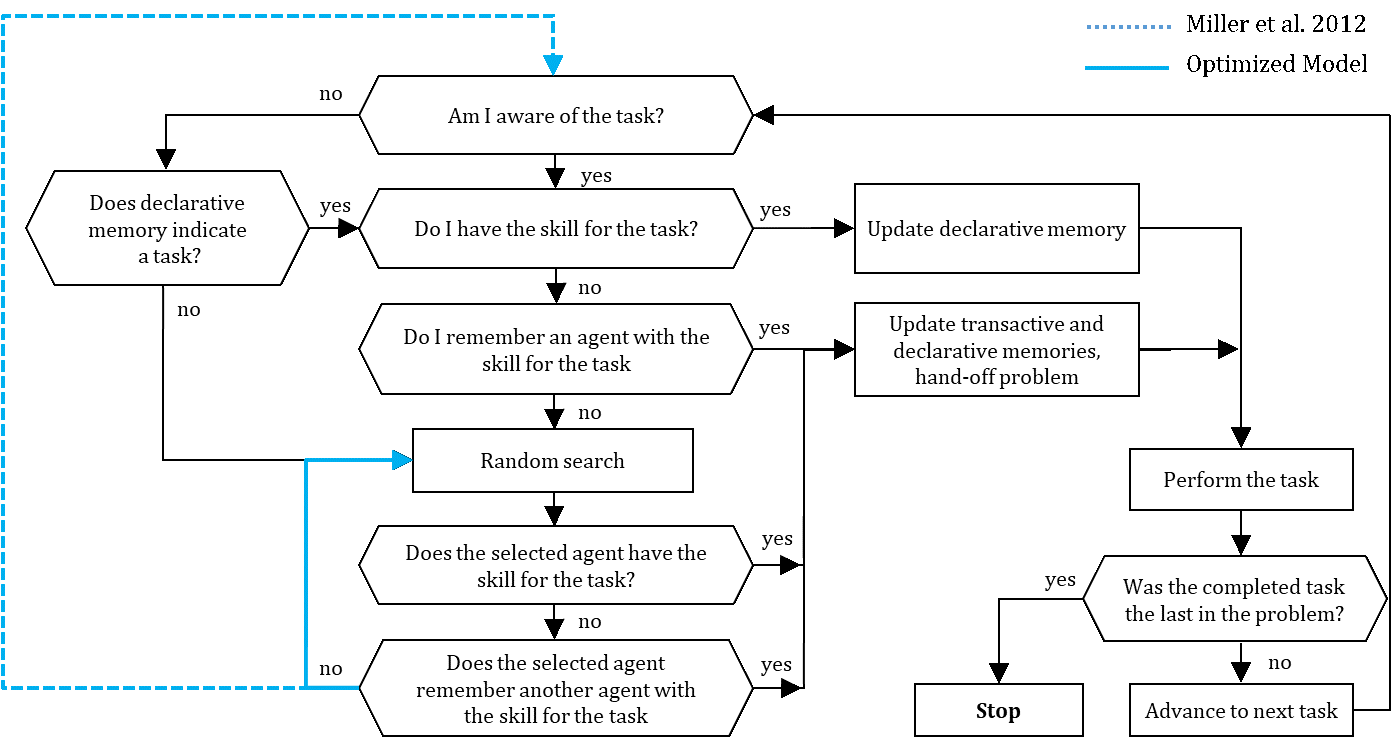

Agents self-organize the problem-solving process (see Figure 1) for given task sequences of the generated problems, except for the first task of each problem, which is always assigned to an agent that is aware of the task and has the required skill. An agent in charge of performing a task in a problem is also responsible for passing the next task in the sequence to another agent. Thus, the agent in charge might remember or must search for another agent that seems capable of handling the next task (Miller et al. 2012). As long as the performed task is not last in the problem sequence, each agent is responsible for advancing the solution by assigning an agent to the next task. Once a problem is solved, a new problem is generated, initiating a new problem-solving process (Miller et al. 2012).

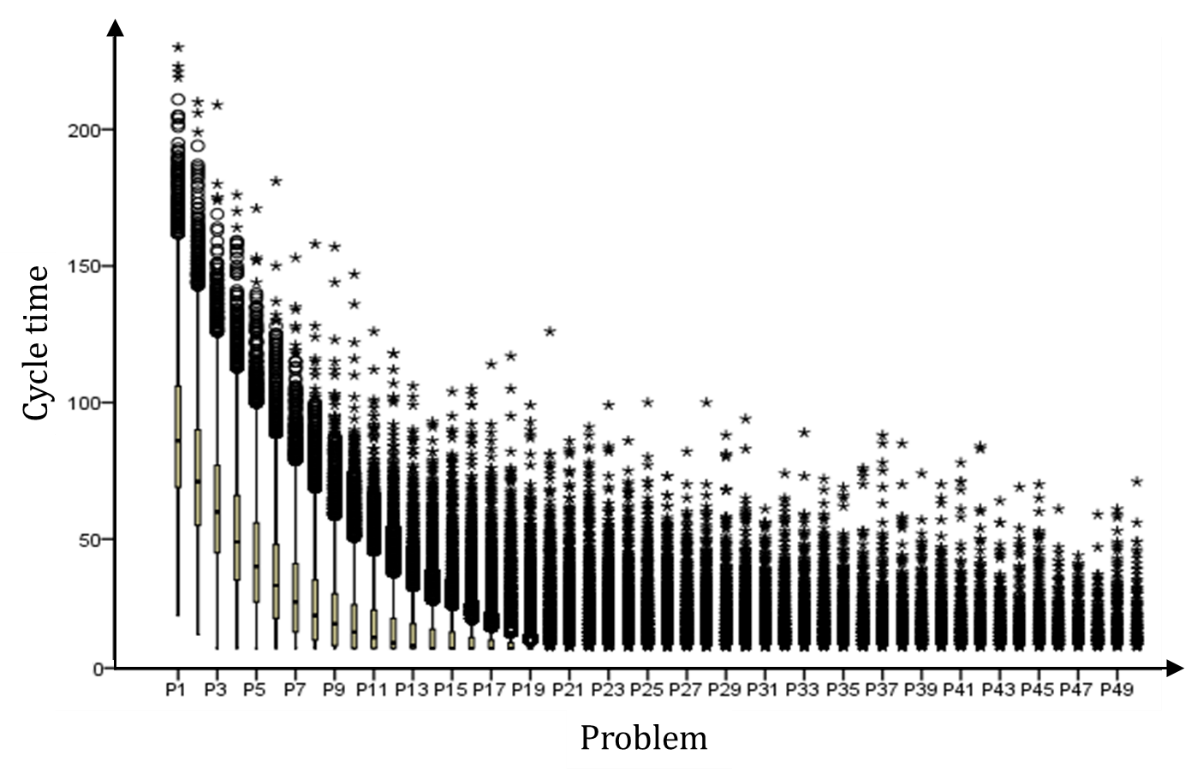

Organizational performance is measured by cycle time, calculated for each problem-solving process. Until a problem is solved, cycle time increases incrementally when agents (\(n\)) perform either necessary (\(n_t\)) or unnecessary (\(u_t\)) tasks and due to search costs (\(s_t\)) caused by unsuccessful random search attempts by agents. An organization achieves minimum cycle time if it only performs necessary tasks and if no search costs occur (Miller et al. 2012). The minimum cycle time equals the number of tasks in a problem.[13]

| $$\it{Cycle\, time}=\sum_{t=1}^{n_t}+\sum_{t=1}^{u_t}+\sum_{t=1}^{s_t}$$ |

Clarification of the Conceptual Model and Critical Reflections on the Design

The ODD protocol enables standardized descriptions of agent-based models with the intent to increase the efficiency of communicating conceptual models and preventing ambiguous model descriptions (Grimm et al. 2006, 2010). In particular, the ODD protocol fosters the clear, comprehensive, and non-overlapping model specifications required to replicate a model.

The ODD protocol can be used to transfer the unstructured, possibly scattered descriptions of a model into a standardized, accessible format for efficient subsequent consultation. A replicating modeler should avoid re-implementing a model from the original code to prevent bias (Wilensky & Rand 2007). Using the explicit intermediate result of the ODD protocol avoids this problem.

Experimental clarification of ambiguous model assumptions

We discovered an unclear assumption from the model description in Miller et al. (2012) when transferring their information into the structure of the ODD protocol. We clarified this ambiguity experimentally, without consulting the original code, to identify the underlying assumptions used in the original paper. The abstract model description also allows for model improvements without violating its original assumptions.

Specifically, Miller et al. (2012, p. 1542) state that the first task of a new problem is assigned at random to an agent that is skilled for this task. Hence, one can conclude that this statement is valid for each problem, although the modeled organization faces recurring problems by default. Another passage on changing problems makes this statement ambiguous, however:

To simulate a one-time exogenous change in the organization’s operating environment, we introduced a permanent change in the problem to be solved. For the 51st problem, the k (=10) tasks were randomly reordered, and the organization faced this new problem repeatedly for the remaining duration of a simulation run (Miller et al. 2012, p. 1548).

This passage suggests that new problems are characterized by reordered task sequences. Hence, one can also conclude that recurring problems are not new problems. This opens two different model assumptions:

- The first task of each problem is assigned to an agent who is skilled in that task.

- Only the first task of a changed problem with reordered task sequence is assigned to an agent who is skilled in that task.

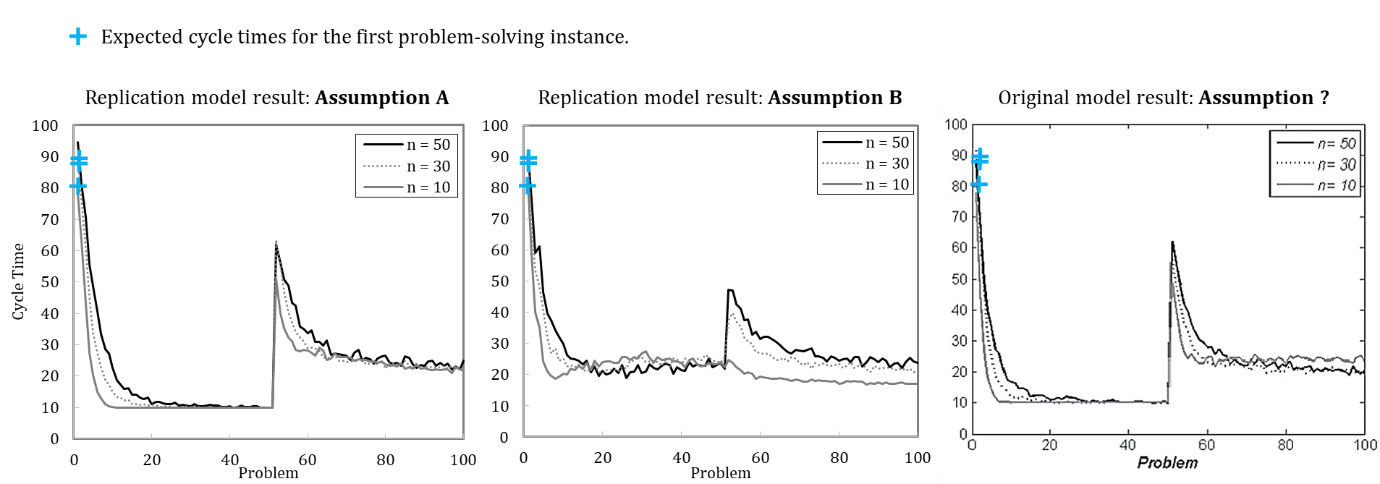

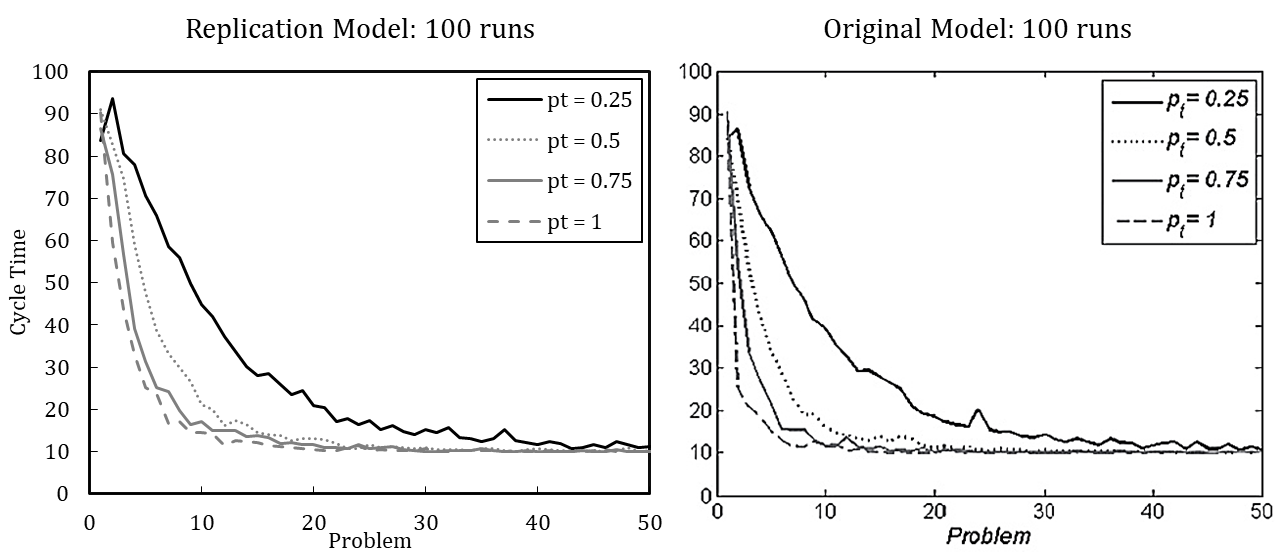

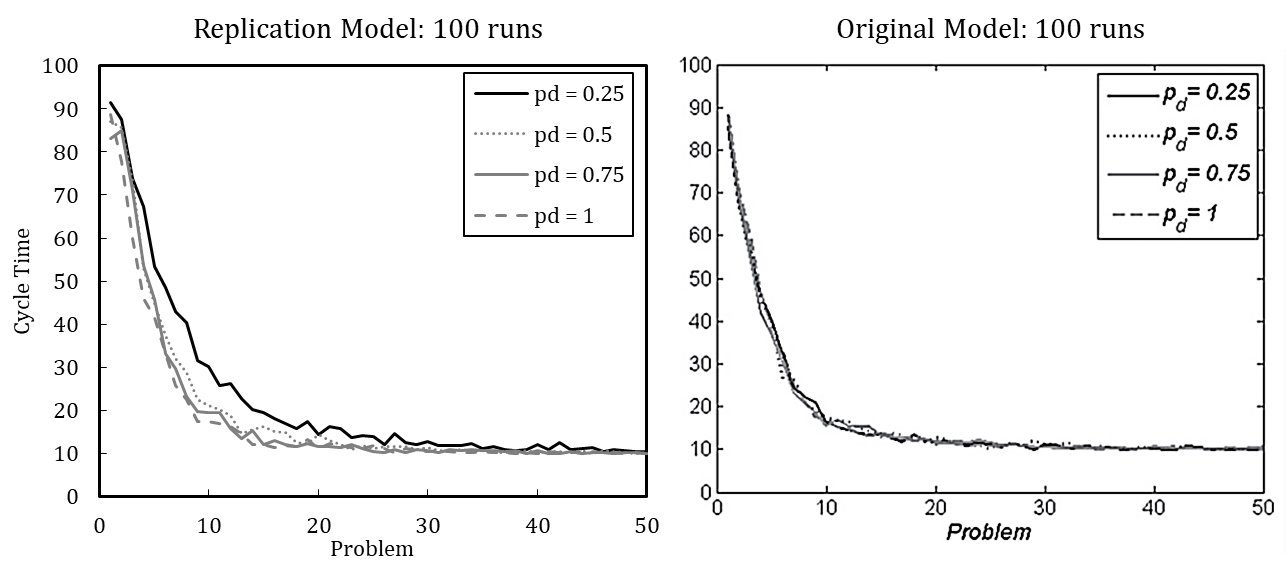

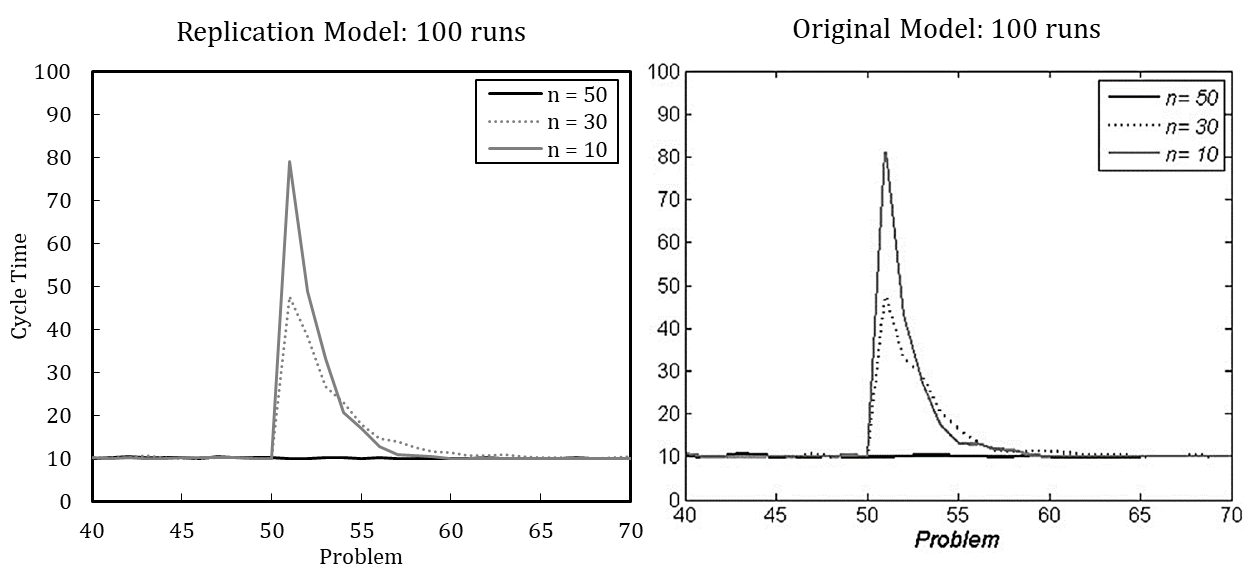

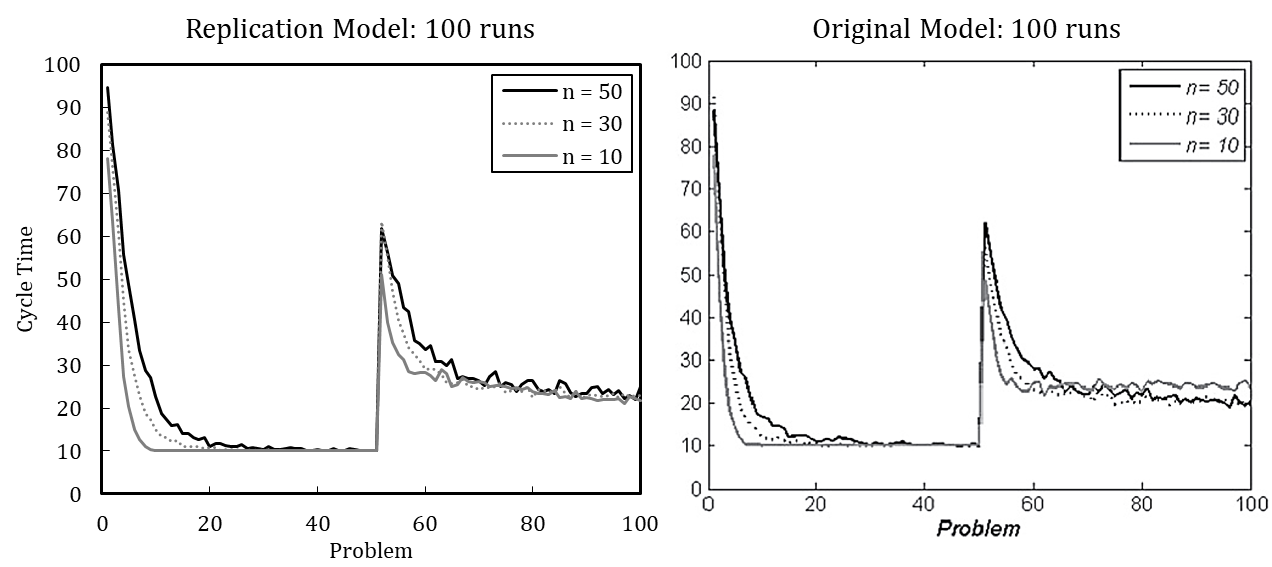

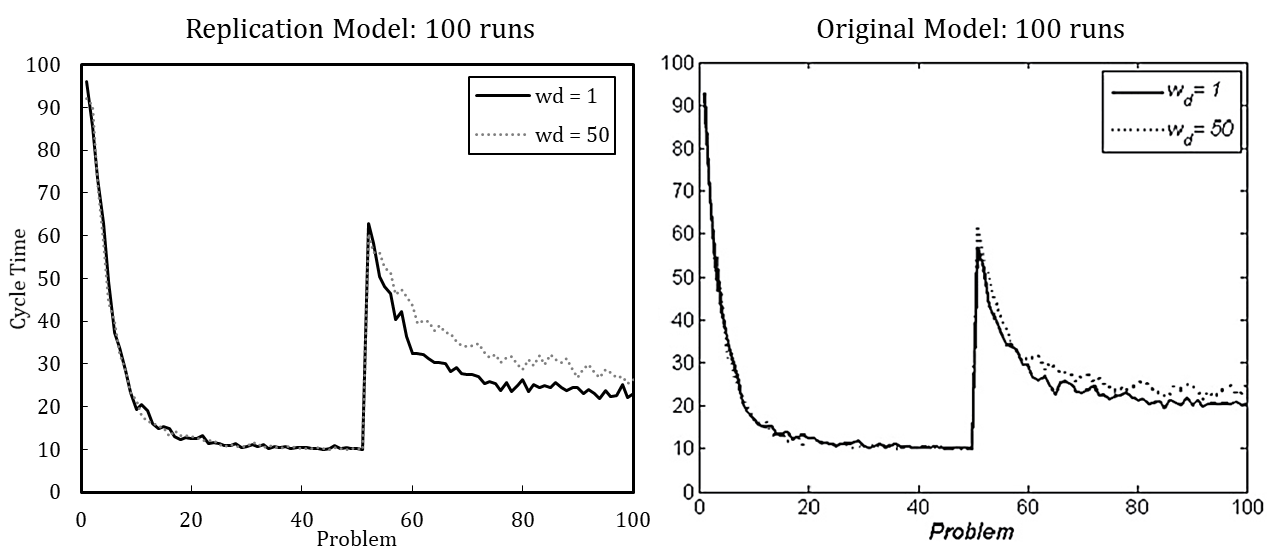

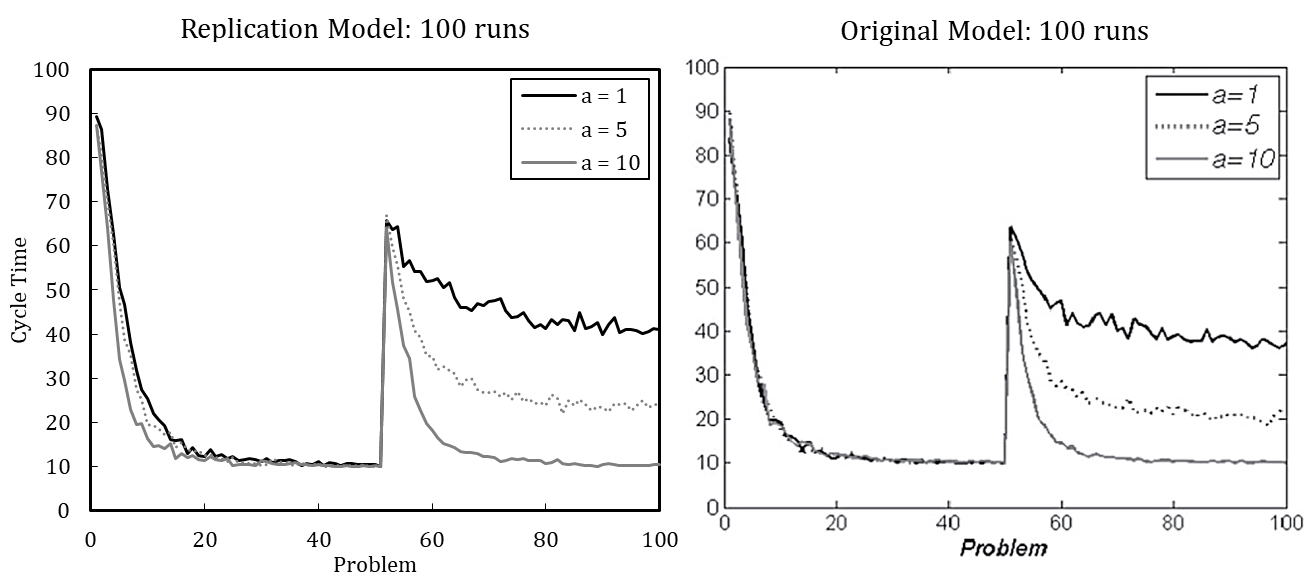

Figure 2 shows the simulation results of the re-implemented model, presuming either (A) or (B). Complementarily, we depict the results of the original model. We use the default parameter setting wherein the update probability of agents’ transactive memory (pt) is varied. The results indicate that the original model used assumption (A), as the resulting pattern better matches the original model.

While a model description in the ODD format cannot protect against all ambiguities, it does make models’ conceptual foundations more explicit. The overall value of the standardized, ODD model description has been comprehensively discussed elsewhere (Grimm et al. 2006); here, we particularly emphasize its value for replication. Our precisely formulated submodel descriptions form a solid basis for writing corresponding functions in the NetLogo code. The model description in the ODD format explicitly expresses the formerly ambiguous assumption (see Appendix A, ODD Protocol, Submodels, problem generation, and task assignment). The final ODD description comprehensively specifies the model in an acknowledged format, which both helps other scholars to understand more precisely the model of Miller et al. (2012) and provides a solid ground for further extensions.

Critical reflections on the conceptual design

Transferring information from the conceptual model into the ODD structure enhanced our understanding of the model, and subsequent pretests revealed two opportunities for improvement.

Figure 1 highlights the first improvement. This modification does not break any model assumptions. In the modified flow chart, an agent searches randomly for agents until one accepts the problem. In the original model, a failed random search attempt results in repetitive scrutiny of the task and consultation of memory. This does not change the agent’s cognitive state, again resulting in a random search.

Second, we argue that random search can be more sophisticated. The original random search is designed as an urn model with replacement. The active agent randomly approaches other agents that might be able to perform the requested task or that can help the searching agent by making a referral to another skilled agent. After an unsuccessful search, the agent again searches randomly among all agents. Hence, the searching agent might approach the same agent again, implying that the searching agent would not remember which agents were approached unsuccessfully before. This assumption is counterintuitive and empirically unlikely. On the one hand, agents in general can remember other agents and their skills. On the other hand, agents do not remember meeting an approached agent during a search attempt. An alternative model design could be tested in which agents are also able to learn from an unsuccessful random search attempt. An alternative urn model without replacement would reduce the search costs and cycle time of a problem-solving process.

Overall, using the ODD protocol helped to define the conceptual model and revealed where the original model description allowed two contradictory assumptions. Furthermore, the ODD structure helped to identify opportunities for model improvements without violating the initial assumptions and highlighted alternative model designs that extend the original model.

Using DOE Principles to Evaluate the Replicated Model

Since the simulation model has stochastic elements, the results reported risk being unrepresentative, which could threaten the reliability of conclusions drawn from the simulation experiments. DOE principles, therefore, demand specification of the required number of runs based on the coefficient of variation for the performed experiments, [14] which allows consideration of stochastically induced variation and thereby enhances the credibility of results.

Our design incorporates low (L), medium (M), and high (H) factor levels, as highlighted in Table 3. These three design points reflect the applied settings to estimate the number of simulation runs needed to produce sufficiently robust results given model properties and stochasticity.

| Design Points | Factors | Representation | ||||

| \(n\) | \(a\) | \(p_t\) | \(p_d\) | \(w_d\) | ||

| L | 10 | 1 | 0.25 | 0.25 | 1 | Low factor levels |

| M | 30 | 5 | 0.5 | 0.5 | 25 | Medium factor levels |

| H | 50 | 10 | 0.75 | 0.75 | 50 | High factor levels |

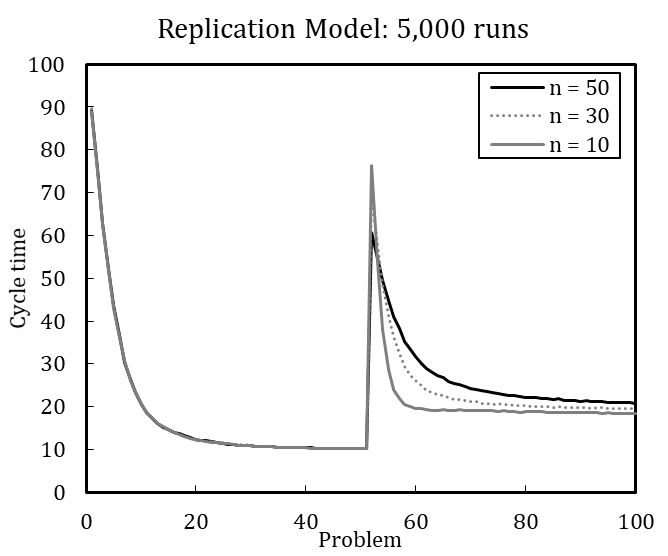

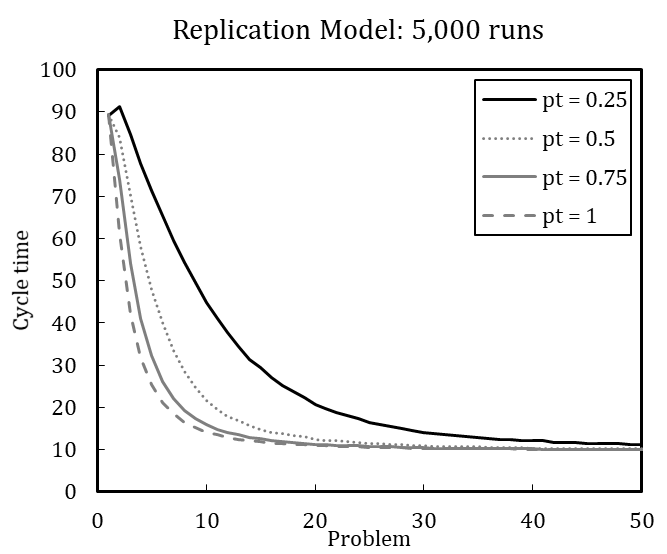

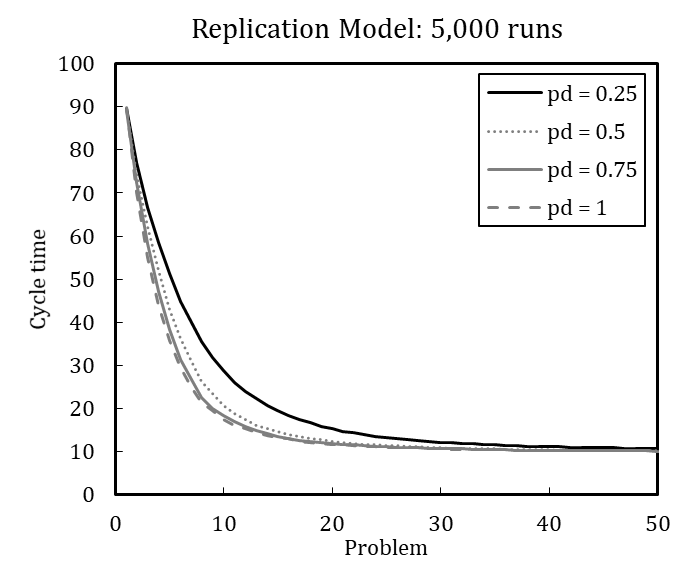

Table 4 shows the error variance matrix with mean values and coefficients of variation for design point M (for the full error variance matrix, see Appendix C). We measured cycle time at five selected steps during the simulation runs, namely when the problems (P) 1, 25, 51, 75, and 100 are solved, to account for the dynamic characteristic of the dependent variable.[15] The coefficient of variation (\(c_v\)) is calculated as the standard deviation (\(\sigma\)) divided by the arithmetic mean (\(\mu\)) of a specific number of runs (Lorscheid et al. 2012). The cycle times in Table 4 result from different number of simulation runs ranging between 10 and 10,000. The coefficients of variation stabilize with increasing number of runs at about 5,000 runs; the mean values and coefficients of variation change only slightly from 5,000 to 10,000 runs. We therefore conclude that 5,000 runs are sufficient to produce robust results. [16]

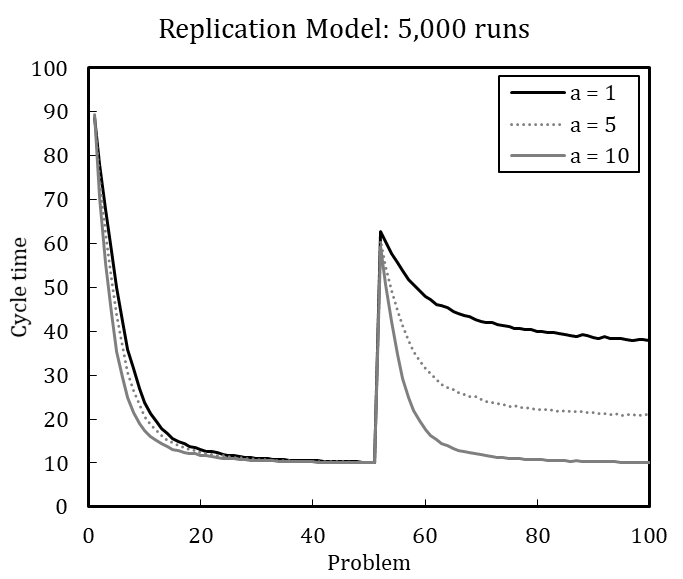

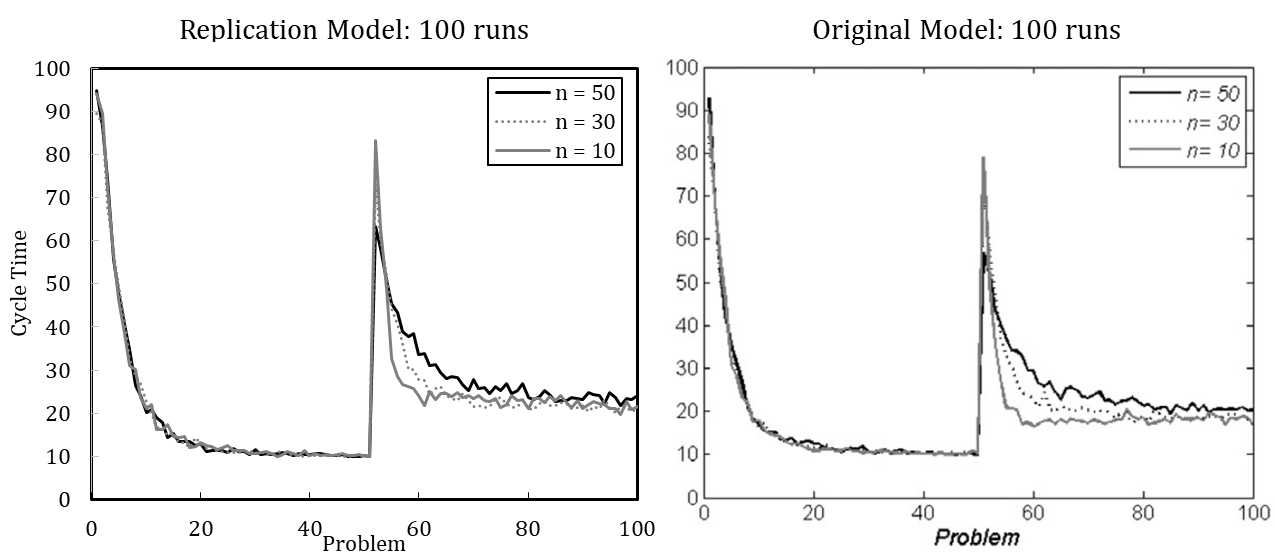

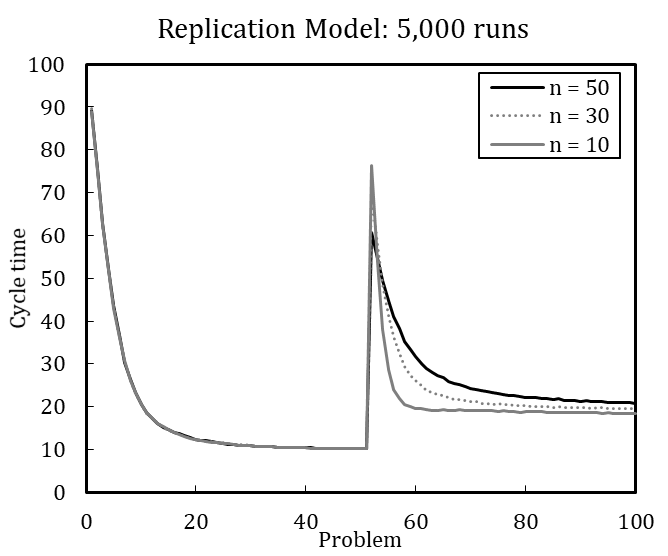

With significant error variance detected for 100 simulation runs, results averaged over 100 runs or fewer should be carefully interpreted. Regarding the cycle time for the 25th problem, the coefficient of variation is 0.14 for 100 runs and 0.20 for 5,000 runs, which is a considerable difference. Visual comparison of experimental results based on 100 averaged runs is thus imprecise and error-prone compared to a comparison based on 5,000 simulation runs.

| Design points and dependent variable | Number of runs | |||||||

| 10 | 50 | 100 | 500 | 1000 | 5000 | 10000 | ||

| Cycle time (P1) | \(\mu\) | 95.80 | 89.44 | 88.19 | 90.15 | 88.61 | 87.98 | 87.86 |

| \(c_v\) | 0.24 | 0.29 | 0.32 | 0.30 | 0.31 | 0.31 | 0.31 | |

| Cycle time (P25) | \(\mu\) | 10.00 | 10.40 | 10.26 | 10.30 | 10.39 | 10.29 | 10.27 |

| \(c_v\) | 0.00 | 0.19 | 0.14 | 0.19 | 0.22 | 0.20 | 0.20 | |

| Cycle time (P51) | \(\mu\) | 59.50 | 59.04 | 57.36 | 58.82 | 59.00 | 58.43 | 58.32 |

| \(c_v\) | 0.31 | 0.28 | 0.31 | 0.32 | 0.33 | 0.32 | 0.32 | |

| Cycle time (P75) | \(\mu\) | 30.30 | 30.32 | 28.98 | 28.00 | 28.42 | 28.63 | 28.49 |

| \(c_v\) | 0.22 | 0.34 | 0.35 | 0.36 | 0.39 | 0.38 | 0.38 | |

| Cycle time (P100) | \(\mu\) | 19.20 | 22.08 | 22.77 | 23.03 | 23.14 | 22.97 | 22.95 |

| \(c_v\) | 0.25 | 0.46 | 0.43 | 0.42 | 0.41 | 0.41 | 0.41 | |

A high number of simulation runs also confirm the expected values for cycle time as determined analytically (see Appendix C), which offers further evidence that the conceptual model is implemented correctly. The analytically calculated cycle time for the first problem-solving instance (P1) of the medium-sized organization (n = 30) is 88.00, and the simulated average cycle time over 10,000 runs is close to this at 87.86. Such an approximate “numerical identity” is also found for a small organization (n = 10), with expected and simulated cycle times of 82.00 and 81.62, respectively, and for a large organization (n = 50), with anticipated and simulated cycle times of 89.20 and 89.52, respectively (see Appendix C).

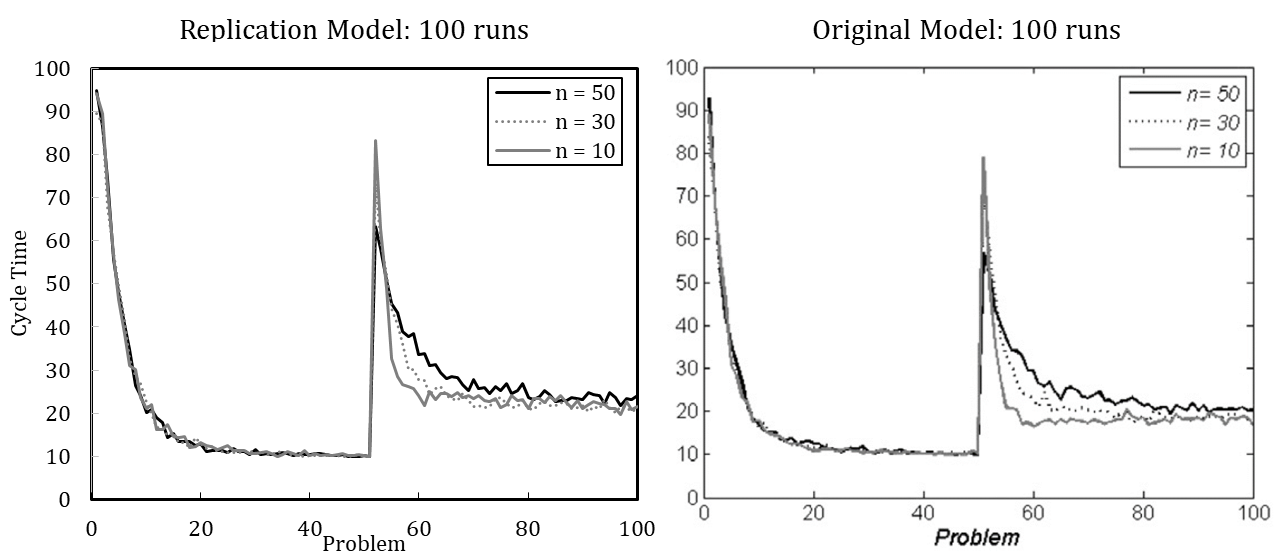

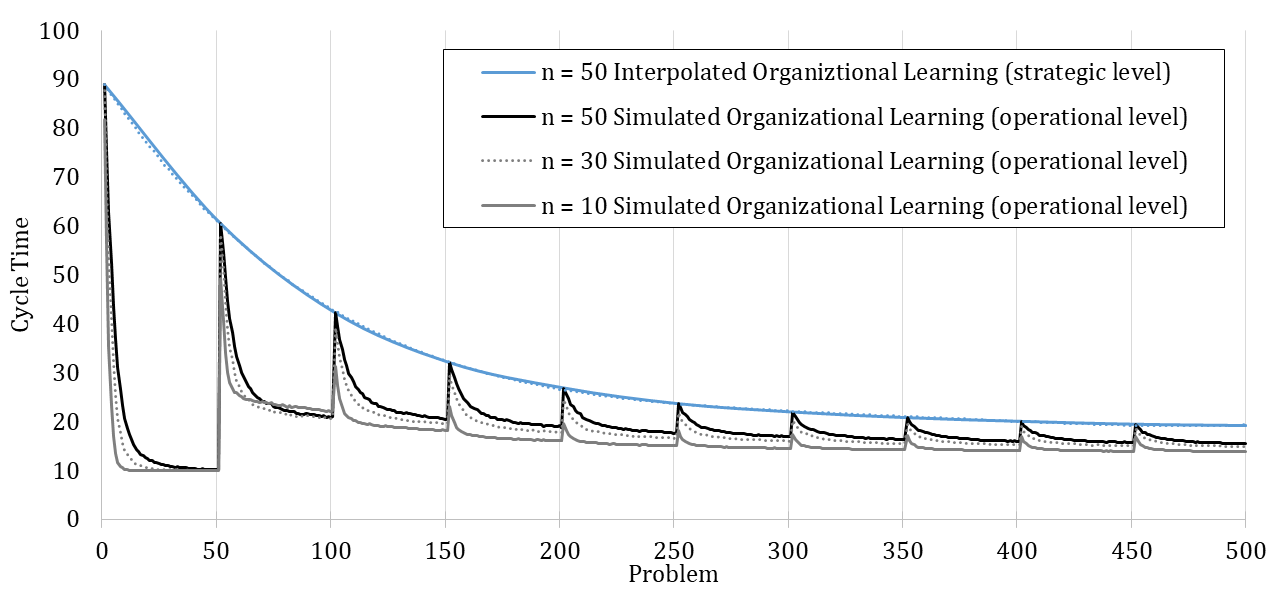

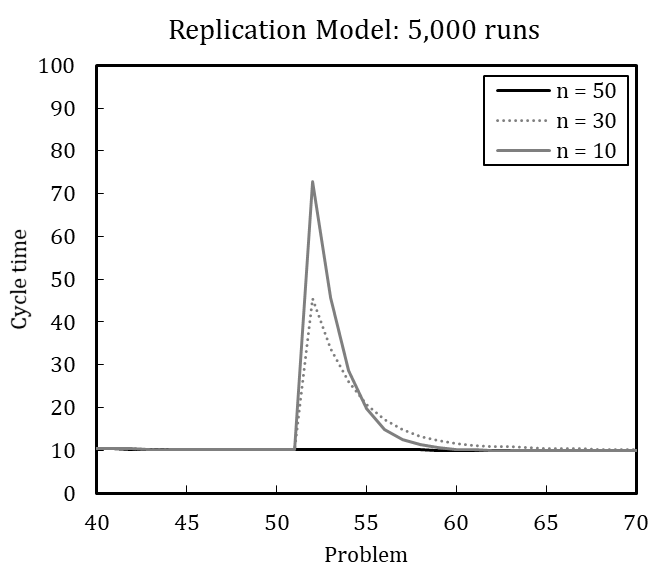

To illustrate the value of defining the number of runs based on the coefficient of variation, we offer the following example. Miller et al. (2012) model in their final experiment an external change to and simultaneous downsizing of an organization; downsizing is thus modeled as a response to external change. The organization faces a changed problem once the 50th recurrent problem is solved. At the same time, the organization is downsized from (\(n\) = 50) to (\(n\) = 30) and from (\(n\) = 50) to (\(n\) = 10) agents.

Figure 3 shows the considerable increase in cycle time after simultaneous problem change and downsizing. In terms of cycle time, the organization that continuously operates with 50 agents peaks at 63, whereas the downsized organization of 30 members peaks at 73, and the downsized organization with ten members peaks at 83. Hence, downsizing initially interferes with organizational performance (see also Miller et al. 2012). The organization lost experienced members and their crucial knowledge for coordinating activities.

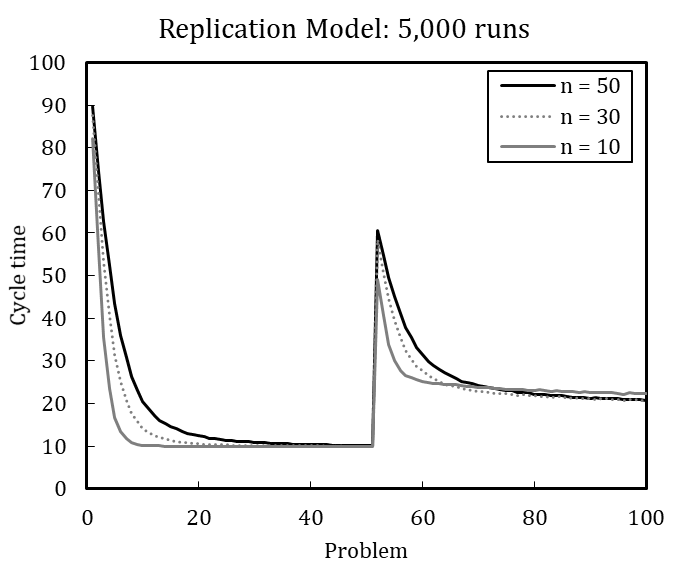

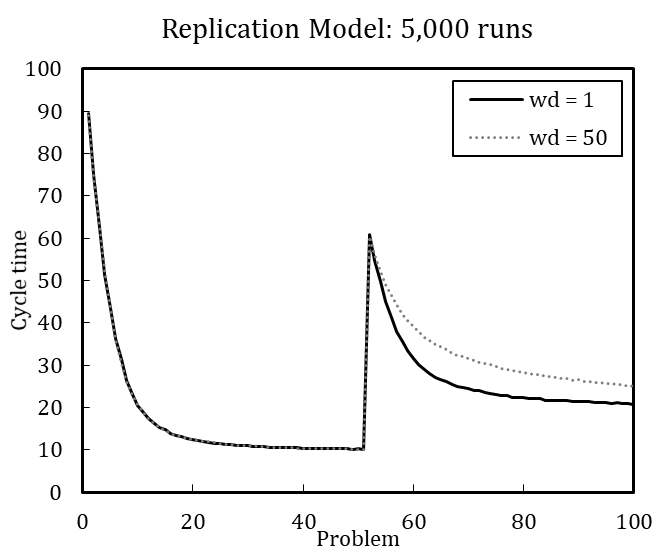

Although the averaged results of 100 simulation runs suggest that downsized organizations potentially learn more quickly in the new situation, no reliable statement can be made about which organization performs better after the change. [17] An increased number of runs enables more detailed interpretation (see Figure 4). The heavily downsized organization with only ten remaining members shows the highest performance after the change. At first, the heavily downsized organization performs worst, but learns much faster to handle the new situation. Still, none of the organizations regain optimal performance. This suggests that smaller organizations are more agile in creating a new knowledge network among agents.

In line with this example, we have replicated each experiment of Miller et al. (2012) with 100 runs and with 5,000 runs (see Appendix B). The results, while qualitatively identical, nevertheless slightly differ quantitatively, which is likely driven by stochasticity. Based on the qualitative equivalence of the results, especially regarding the patterns in behavior after problem changes and downsizing, we conclude that the original model and our replication have identical assumptions.[18]

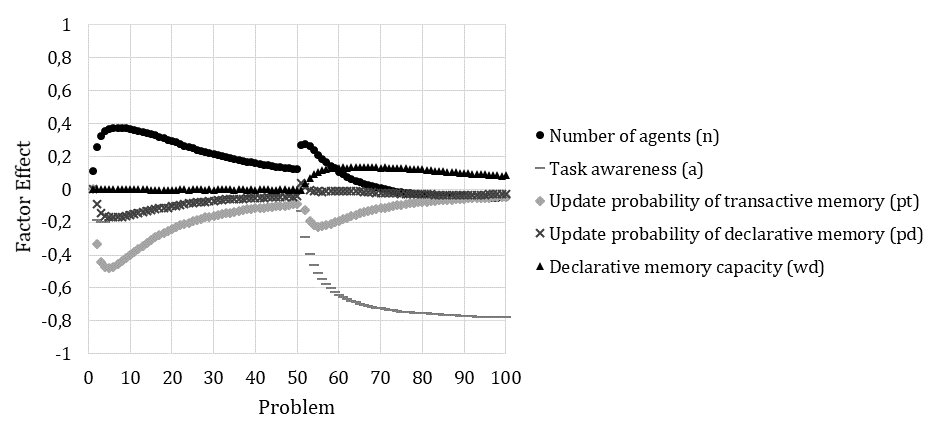

The simulation results show high variance derived from model stochasticity (for a detailed analysis, see Appendix C). We defined the coefficient of variation to improve our understanding of the model’s behavior and assess the precision of both our results and those as published by Miller et al. (2012). Calculation of effect sizes and interaction effects (see Appendix C) further deepened our understanding of the model’s behavior, offering still further evidence that both models behave identical.

Overall, applying DOE principles enabled us to analyze the model’s behavior systematically. For evaluating the replicated model, we found it crucial to determine the number of runs and understand stochastically induced variance. The replicated model produces quantitatively similar and qualitatively identical results. According to the classification of Axelrod (1997), the results are “relationally equivalent” and hint overall at “distributional equivalence” once error variance is taken into account. Hence, we conclude the model is replicated successfully.

Developing Theory with the Replicated Model

The following offers an example of how modest model extensions and in-depth analyses of simulation results can help consolidate insights and advance the understanding of vaguely specified concepts to develop theory. In a commonly used definition by Davis et al. (2007), theory comprises four elements:

Constructs, propositions that link those constructs together, logical arguments that explain the underlying theoretical rationale for the propositions, and assumptions that define the scope or boundary conditions of the theory. Consistent with these views, we define theory as consisting of constructs linked together by propositions that have an underlying, coherent logic and related assumptions.

Miller et al. (2012) address the theory of routines by Feldman & Pentland (2003), which states a reciprocal relationship between the performative and ostensive aspects of routines. The interaction between these two aspects, however, is only vaguely understood, with only partial empirical grounding (Biesenthal et al. 2019). Formal modeling provides the means to investigate underlying mechanisms by operationalizing theoretical constructs. In this respect, Miller et al. (2012) operationalize the dynamic interdependence of actions and memory distributed across an organization. In their computational representation, routines’ ostensive aspect is constructed via three types of memory residing in individuals distributed across the organization. As individuals draw on their memory to solve incoming problem sequences, the performative aspect of routines is made observable.

Davis et al. (2007) suggested a roadmap for developing theory using simulations, including the vital step of experimentation given the traditional strengths of a simulation: testing in a safe environment, low costs to explore experimental settings, and high experimental precision. New theoretical insights may be thereby generated by unpacking or varying the value of constructs, modifying assumptions, or adding new features to the computational representation.

We proceed from our successful replication to this crucial step of experimentation, developing theory in the following three ways: extension, in-depth analysis, and theoretical connection. First, we extend the model by exploring a merger in addition to the downsizing analyzed in the original study. By adding another scenario of external change, we extend the scope or boundary conditions and therefore further generalize the theory. Second, we analyze the model more deeply to show how an initial problem leads to a traceable path dependency in routine formation, gaining nuance on how memory functions affect the formation of routines. We thus unpack the theoretical constructs analytically rather than representationally. Third and finally, we elucidate connections to dynamic capabilities, taking our new insights back to the literature to look for intertwined processes not previously considered. In brief, we uncover the path dependency of routines (Vergne & Durand 2010), look for related theory, identify the concept of dynamic capabilities, and extend the experiment to investigate this concept in more detail.

The model simulates organizational routines, which Feldman & Pentland (2003, p. 2) define as “repetitive, recognizable patterns of interdependent action, involving multiple actors.” Feldman & Pentland (2003) conceptualized routines as adhering to recursively connected performative and ostensive aspects, [19] which helps explain the mechanisms of stability and change. [20] The ostensive aspect embodies the abstract, stable idea of a routine, while the performative aspect embodies the patterns of action individuals perform at specific times and places (Feldman & Pentland 2003).

Hodgson (2008) suggested defining routines as capabilities because of their inherent potential. The capabilities they generate are innate to organizations’ ambidextrous capabilities to balance the exploitation of existent competencies with the exploration of new opportunities (Carayannis et al. 2017). On the one hand, organizational performance is contingent on exploration so that the organization can remain competitive in the face of changing demands. On the other hand, organizational performance is contingent on the capability to exploit resources and knowledge. The latter type of performance can be measured in terms of efficiency, that is, a re- duction in cycle time by drawing on past experience (Lubatkin et al. 2006). Ambidexterity is usually related to fundamental measures of success such as firm survival, resistance to crises, and corporate reputation (Raisch et al. 2009).

Organizations’ ability to operate in a specific environmental setting is determined by the suitability of their routine portfolios (Aggarwal et al. 2017; Nelson & Winter 1982). Routines facilitate efficiency, stability, robustness, and resilience (Feldman & Rafaeli 2002); innovation (Carayannis et al. 2017); and variation, flexibility, and adaptability (Farjoun 2010). An underlying assumption is that organizations achieve optimal performance by finding appropriate responses to changes in the environment. Hence, organizations aim to align external problems with internal problem-solving procedures so they may respond adequately to their environment and maintain equilibrium between internal (organizational) and external (environmental) aspects (Roh et al. 2017).

Generalizing theory: Routine disruptions when organizations merge

Besides downsizing—which Miller et al. (2012) studied, as mentioned above—mergers are another frequent activity by which organizations respond to external changes (Andrade et al. 2001; Bena & Li 2014). Because mergers require the integration of new personnel, human resource issues are critical, but the literature on mergers and acquisitions often neglects this aspect (Sarala et al. 2016). Therefore, to complement the experimental results of Miller et al. (2012) concerning downsizing, we investigate a merger scenario to generalize the understanding of routine disruptions.

Organizations comprise personnel with different experiences, which, as indicated by previous results, are crucial to form routines. Thus, we expect that integrating new staff, whether experienced or inexperienced, affects post-merger routine performance. We model untrained employees as agents with empty declarative memory (a) and model experienced employees as agents with randomly replenished declarative memories (b), thereby assuming that agents have some operational knowledge. [21] Figure 5 depicts organizational performance under different post-merger processes of routine formation. The following analysis models the merger activity as an organization’s response to an external shock, as reflected by a change in problem.

Case 1 represents an organization that integrates new personnel in stable environmental conditions. This integration initially disrupts the original routines whether the new personnel members are inexperienced (a) or experienced (b), which negatively affects organizational performance in a similar pattern as downsizing, albeit less intensively (see Appendix B). The integration of inexperienced personnel (Case 1a) allows organizations to form new routines with optimal performance, suggesting that the new staff adopt the lived routines. In contrast, the integration of experienced personnel (Case 1b) results in lower organizational performance, even in the long run; the new staff does not completely unlearn obsolete sequences of task accomplishment. [22]

Case 2 represents an organization that integrates new personnel in response to an external shock, as reflected by a problem change. The change and simultaneous integration of new personnel force the organization to learn new routines. The learning curves of merged organizations are quite similar to those of downsized organizations (see Appendix B). Organizations with new, inexperienced personnel (Case 2a) perform worse, suggesting that the new staff is not well integrated; organizational behavior is predominantly determined by core personnel (n = 10). On the other hand, organizations integrating experienced personnel (Case 2b) can form routines that result in optimal performance.

We can now generalize that mergers and downsized organizations show similar patterns in organizational performance (see Appendix B); both involve disrupted routines. Comparison between Cases 1 and 2 shows the conditions under which merging organizations can develop efficient routines. The finding that mergers can initially decay adherence to routine agrees with empirical results (see, e.g. Anand et al. 2012). Moreover, the literature on successful mergers highlights the importance of forming new, high-order routines that can resist blocking effects from existing routines; successful mergers can then, afterward, realize radical innovations (Heimeriks et al. 2012; Lin et al. 2017). In other words, the success of a merger depends on individuals’ experience, as this affects whether lived routines can be maintained and whether new efficient routines can be formed.

In conclusion, organizations that downsize or merge as a response to an external shock stimulate the formation of new routines. We found that both downsizing and merging initially reinforce the disruption of established routines. Loss of organizational knowledge initially reduces performance in downsized organizations, but such organizations quickly form new, efficient routines. In a complementary finding, Brauer & Laamanen (2014) found that the pressure of downsizing on the remaining individuals forces them to engage in path-breaking cognitive efforts that can lead to better results than the repair of routines by drawing on experience. In a further generalization of the ideas Miller et al. (2012) presented, we conclude that the routines of organizations are similarly affected when organizations downsize or merge in response to an external shock.

Deeper analysis: Routine persistence in organizations facing volatility

If routines are a recurrent pattern of actions, the question remains which patterns can emerge. An appropriate organizational routine matches the task sequence of the problem at hand. Some less efficient organizations, however, struggle to coordinate their activities with the problem. In particular, inappropriate behavior by agents might create unnecessary activity.

To explore the link between the behavior of individuals and emerging routines, we performed an experiment in which an organization again begins by facing 50 recurrent problems. The organization thereby has the chance to form a routine. Thereafter, it faces 50 different problems, each characterized by a new, randomly shuffled task sequence[23]. Hence, the modeled organization must adapt to multiple, distinct problems. At the end of the simulation, in the 100th problem-solving instance, we measure the frequency of emerging patterns of actions to investigate whether the organization has unlearned the routine, initially developed over the first 50 problems, that has since become obsolete.

Table 5 shows the frequencies of subsequently performed tasks by the organization. The matrix contains the relative frequency of performed actions as measured on the 100th problem-solving instance of a simulation, averaged over 5,000 runs. The actions that the organization performs to solve the generated problems comprise necessary (73%) and unnecessary actions (23%). Most combinations of subsequent, accomplished tasks occur similarly often, with a probability of around 1%. However, a few interdependent actions have a likelihood of emerging around 2%. These correspond to the subsequent, ordered tasks of the initial problem.

| Subsequent performed task | |||||||||||

| Performed task | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | |

| 0 | 0.00 | 2.97 | 1.13 | 0.79 | 0.63 | 0.66 | 0.57 | 0.59 | 0.61 | 0.54 | |

| 1 | 0.69 | 0.00 | 2.85 | 1.40 | 1.07 | 1.05 | 1.00 | 0.95 | 0.91 | 0.91 | |

| 2 | 0.97 | 0.72 | 0.00 | 2.55 | 1.36 | 1.07 | 1.09 | 1.04 | 1.04 | 0.99 | |

| 3 | 0.97 | 0.95 | 0.79 | 0.00 | 2.48 | 1.29 | 1.11 | 1.06 | 0.99 | 0.96 | |

| 4 | 1.07 | 0.99 | 0.91 | 0.78 | 0.00 | 2.26 | 1.27 | 1.14 | 1.03 | 0.98 | |

| 5 | 0.98 | 1.01 | 1.03 | 0.92 | 0.79 | 0.00 | 2.06 | 1.23 | 1.03 | 0.96 | |

| 6 | 0.91 | 1.03 | 0.99 | 1.00 | 0.86 | 0.78 | 0.00 | 2.07 | 1.18 | 0.97 | |

| 7 | 0.97 | 0.98 | 0.94 | 1.00 | 0.99 | 0.90 | 0.74 | 0.00 | 2.07 | 1.20 | |

| 8 | 0.96 | 1.05 | 1.00 | 1.01 | 1.04 | 0.91 | 0.91 | 0.71 | 0.00 | 2.00 | |

| 9 | 1.00 | 1.14 | 1.17 | 1.15 | 1.14 | 1.14 | 1.09 | 1.03 | 0.75 | 0.00 | |

The initially learned routine (to solve the recurrent problems numbered 1 to 50) persists. Although the organization copes more recently with diverse situations (random problems 51 to 100), the prior, learned behavior of the organization remains traceable. This persistence of organizational behavior matches the detected behavior of individuals (see Appendix E). Individuals and the organization maintain obsolete knowledge, implying that an organization’s past pattern of action partially persists. Recurrent patterns of interdependent actions reduce organizational performance if these actions do not match the situation at hand. Developed routines can be detrimental when an organization faces change.

The development of organizational capabilities in terms of routines is path dependent (Aggarwal et al. 2017). The results of a similarly designed experiment offer further support. When the organization exclusively copes with different problems, the original action pattern remains traceable (see Appendix F). Therefore, one might consider this development of organizational capabilities to be path dependent. This is in line with some scholars position, portraying routines as organizational dispositions or even genes.[25] However, conceptualizing routines as dispositions is untenable, because other factors, such as individuals’ high task awareness, can prevent the persistence of routines (see Appendix C).

Refining simple theory: Dynamic capabilities

If processes of knowledge integration could provide micro-foundations for dynamic capabilities, the model of Miller et al. (2012) resembles knowledge-integration routines, conceptualizing an individual’s memory as three different types or functions. The distinct properties of an agent’s memory function correspond to distributed, specialized knowledge in a firm. To solve collective problems, agents coordinate their actions based on their memory functions. The ability to learn from previous actions leads to the development of routines with recurring properties for problem-solving, with the formation and performance of these routines affected by distinct properties of individual’s memory.

We found that an initial problem leads to traceable path dependency in the routine-formation process, which prevents an organization from again reaching initially achieved cycle times after an external shock and thereby constituting a natural limitation on dynamic capabilities. This newly gained insight motivates a closer investigation of the effects of such path dependencies on dynamic capabilities, using our replicated model.

The model enables interpretations from an operational and strategic perspective. On an operational level, a change in problem decreases organizational performance because established working procedures become obsolete and forming new routines requires search costs. This consideration is short term, however. On a strategic level, organizations that face environmental changes have the opportunity to learn; in the long run, the experience thus gained might improve their capability to handle such changes.

In Figure 6 an organization learns sequentially over ten different problems with 50 problem instances each, highlighting the organization’s performance on both levels. The individuals in the organization search for new paths to adapt their activities to new situations induced by the problem changes. The organization thereby develops operational capabilities to reduce the cycle time between problem changes and gains a dynamic capability over the long run to manage external changes.

The organization’s dynamic capability emerges from the cognitive properties of individuals.[26] The development of such dynamic capabilities has, according to the model design, two prerequisites. First, individuals can revise their declarative memories so that they can change their learned problem-solving sequence. Second, the internal staffing structures of the organization are non-rigid. The more individuals are forced to search for new paths to solve problems, the more likely they are to search for and randomly meet other individuals. This yields an experienced organization comprising members who know each other very well. The organization exploits this knowledge when it faces a change. Modeled here is an ambidextrous organization that can both exploit acquired knowledge and explore new paths.

Organizations that recurrently encounter external changes develop dynamic capabilities that enable them to handle changes in an experienced manner, which enhances their operational performance during crisis-like events. Overall, the simulation offers evidence that organizations can form both dynamic and operational capabilities based on routines formed through individual’s memory functions. In the long run, organizations that regularly form new routines develop dynamic capabilities. Given this result, we hypothesize that even an organization operating in a highly volatile environment can form routines.

Therefore, we model a volatile environment using continuous changes in problem. Figure 7 shows the averaged results over 5,000 simulation runs for three different organizations operating in volatile environments. We set the model parameters to the defaults except for the memory update probabilities of individuals. The organization without memory (\(p_t =\) 0 and \(p_d =\) 0) is unable to learn and solves problems exclusively through random search, which results in consistently poor performance over time. The simulated cycle time is approximately 89.20, which tracks the analytically determined cycle time (see Appendix D). The organization with transactive and declarative memory (\(p_t =\) 0.5 and \(p_d =\) 0.5) can learn and performs better over the long run. The organization with transactive memory but without declarative memory (\(p_t =\) 0.5 and \(p_d =\) 0.0) shows, in the long run, the best operational performance in the volatile environment.

The results suggest that organizations can learn and form routines, even in volatile environments. Routines may be flexibly enacted based on organizational experience through mechanisms that can be explained by incorporating the previous findings.

Transactive memory allows agents to learn about the skills of their colleagues, implementing a network for who knows what. Continuously changing problems force agents to coordinate to accomplish tasks, which teaches agents about the skills of multiple colleagues. Agents in charge of but not skilled at or aware of a task draw on their personally developed networks.[27] Most agents, by gaining experience over time, develop such networks, which are interrelated. They allow the organization to retrieve distributed knowledge and flexibly coordinate whichever activities are appropriate to the current situation. [28]

Agents’ declarative memory negatively affects organizational performance in the midst of volatility, standing in contrast to its positive effect in stable environments. Besides their personal networks, agents’ actions also result from their learned problem-solving sequence, which becomes inappropriate when tasks change. The resulting behavior is then detrimental to organizational performance and perturbs the formation of efficient routines.

In summary, individuals’ learning capabilities enable organizations to form efficient (meta)routines, independent of environmental conditions. The performance of organizations in terms of learning varies with the type of memory combined with the type of environment. The particular effect of transactive memory was highlighted in a follow-up study by Miller et al. (2014), which applied a similar model design. Investigating organizations operating in volatile environments, we found that individuals’ transactive memory enables organizations to develop dynamic capabilities, while their declarative memory can weaken that effect.

Overall, our results show that individual and organizational learning are antecedents of the development of both routines and dynamic capabilities in organizations, as Argote (2011) had postulated. Individuals in an organization learn problem-solving sequences and apply their knowledge, which is a prerequisite for the formation of routines. This positively affects organizational performance as long as the organization operates in a stable environment. However, a learned problem-solving sequence is detrimental to organizational performance when conditions change, although this detrimental effect is not necessarily linear, because interactions among individuals can compensate for some problem-inappropriate behavior.

Routines are related to the concepts of cognitive efficiency and the complexity of problem-solving processes (Feldman & Pentland 2003), but existing literature has not examined whether environmental shocks and volatility counter the cognitive efficiency generated by organizational routines (Billinger et al. 2014). Using the replicated model, we demonstrated that organizations can form routines while operating in volatile environments. When problems change frequently or continuously, such (meta)routines are not detectable merely based on observable patterns of action.

Conclusion

This paper used a replication of a simulation model, namely that of Miller et al. (2012), to develop theory, and demonstrated the benefit of using standards, such as ODD and DOE, in the replication process. Our replicated model produces quantitatively similar and qualitatively identical results that are “relationally equivalent” and hint overall at “distributional equivalence,” following the classification of Axelrod (1997).

Replications of simulation models must rely on published conceptual model descriptions, which are often not straightforward (Will & Hegselmann 2008), even for a relatively simple model, as was the case here. The use of the ODD protocol fosters a full model description through its sophisticated, standardized structure. It is an explicit intermediate result that provides a steppingstone in the replication process (Thiele & Grimm 2015). Transferring the original model description published by Miller et al. (2012) into the ODD format helped to identify formally ambiguous assumptions that we subsequently clarified during pretests with the re-implemented model.

The application of DOE principles was also helpful in several respects. The original model results were unavailable as raw data, presented mainly graphically, averaged over 100 simulation runs, and subject to stochastic influences. Using the DOE principles suggested by Lorscheid et al. (2012), we quantified statistical errors to determine 5,000 simulation runs as an appropriate number enabling reliable visual comparison of graphically depicted outputs. The results of the replicated model generated on this basis match those highlighted by Miller et al. (2012). Hence, we primarily exclude errors due to stochasticity in the replicated results. Moreover, the application of the DOE principles yielded insight into model behavior and validated simulation results against the conceptual model. Analyses of the original code further increased the credibility of the replication.

Our successfully replicated and then verified model offered a solid foundation for further extensions and experiments to develop and refine theory. First, we generalized previous theoretical insights by investigating a merger scenario in addition to the downsizing scenario examined in the original paper, finding a similar qualitative pattern for both. Either disrupts an organization’s established routines, initially reducing performance due to lost organizational knowledge, but organizations can quickly form new, efficient routines. Second, we illustrate how replicated simulation models may be used to refine theory, such as analyzing in-depth the relationship between memory functions and the performance of routines. In this respect we show that initially learned routines persist, locating their path dependence in the memory functions of individuals. Progressing from this finding, new experiments with multiple problem changes allow us to clarify and formally specify a potential mechanism (Smaldino et al. 2015) underlying the still actively debated theoretical concept of dynamic capabilities. Here, given the longitudinal and processual character of the concept, as well as the fact that empirical data are challenging to obtain, simulations offer comparative methodological advantages (Davis et al. 2007). Table 6 gives a summary of how we develop theory with the replicated model.

| Theory | Miller et al. | Replication | Result | Theory development |

| Organizational routines | ||||

| Organizational downsizing scenarios | Organizational merger scenarios | Downsized and merged organizations show similar disrupted performance patterns (=new boundary condition) | Generalization of theory through its extended scope | |

| Routine formation and performance measured by cycle time | More in-depth analysis of developed action patterns and path dependencies | Organizational inertia results from the persistence of few initial learned problem-solving patterns (=path dependency) | Theory refinement via specification of the mechanism of how memory functions affect routine formation | |

| Dynamic capabilities | ||||

| Operational (short-term) performance of organizations facing one crisis event (one problem change) | Strategic (long-term) performance of organizations facing a volatile environment (multiple problem changes) | Distinct understanding of the formation of operational and strategic capabilities of organizations | Conceptualization of routines in context of dynamic capabilities. Theory refinement by deconstructing knowledge routines | |

Some limitations exist, as well. We document the benefits of using the ODD protocol and DOE principles with respect to a replication endeavor. Also, as discussed above, we used quite a large number of runs to obtain stable results. The model’s abstract design enables general interpretations, but its assumptions have not been validated empirically. Moreover, we investigate dynamic capabilities with respect to knowledge integration, but the foundations of the concept of dynamic capabilities are not restricted to this respect. Nevertheless, the agent-based model depicts a potential fundamental mechanism for routine formation and what affects their performance.

The model suggests promising directions to explore in future research on organizational routines. First, the performance of routines that organizations enact to handle volatility could be empirically investigated. Second, regarding model design, future research could test additional submodels. For example, agents’ search could be modeled as an urn model without replacement, which would reduce organizations’ search costs and cycle times. Third, regarding the use of the ODD protocol and DOE principles in model replications, we suggest further testing of these standards in future replication studies to more broadly establish their benefits.

Acknowledgements

We would like to thank the anonymous reviewers for their valuable comments and suggestions that helped us to enhance the quality of the article. This publication was supported by funding program “Open Access Publishing” of Hamburg University of Technology (TUHH).Notes

- One could argue that simulation models that are not independently replicated have only marginal scientific value due to their prototype character.

- Procedural memory reflects agents’ “know how,” declarative memory reflects their knowledge of “what to do,” and transactive memory reflects “who knows what” (for a comprehensive description of the three memory concepts of routines see Miller et al. (2012, p. 1539). Agents draw information from their memory to perform routines.

- Based on Google Scholar citations through June 2019. Another model — Pentland et al. (2012) — has 338 citations, but it is not agent-based (Kahl & Meyer 2016). For a more recent agent-based model of routines, see Gao et al. (2018).

- The studied conceptual model is, in principle, generic, shifting the focus to verification of the fit between the conceptual and implemented models. In explaining their assumptions, the authors refer briefly to the example of a medical service unit (see Miller et al. 2012, p. 1542), but their conceptual model can represent diverse organizational settings because of its design at a high level of abstraction.

- One incentive to replicate agent-based models was the Volterra Replication Prize, but the prize has not been awarded since 2009 (http://cress.soc.surrey.ac.uk/essa2009/volterraPrize.php).

- However, we want to recognize the trend within the ABM community to make models and accompanying data fully available online (Hauke et al. 2017; Janssen 2017). Therefore, setting an example for good scientific practice in comparison to other disciplines, where transparent data sharing is often still lacking.

- To our surprise, the authorsdo not mention agent-based modeling among the listed simulation approaches, which is perhaps why they fail to highlight implications of the strength of the Keep It Descriptive, Stupid (KIDS) approach — handling social complexity in connection with theory development (Edmonds & Moss 1984) — in favor of the Keep It Simple, Stupid (KISS) approach.

- We acknowledge that such a view on theory is not uncontroversial. However, the discussion of what makes a theory unsettles philosophy of science until today. We see their concept of simple theory as a useful substantiation of the to be developed building blocks of a theory: “Constructs, propositions that link those constructs together, logical arguments that explain the underlying theoretical rationale for the propositions, and assumptions that define the scope or boundary conditions of the theory” (Davis et al. 2007). Explicitly addressing these building blocks supports the process of theory development as an evolutionary process (Weick 1989; Whetten 1989). As such, it might be understood as a theory under construction.

- For an assessment of the concept of dynamic capabilities as a theory, see Denrell & Powell (2016).

- We also screened the MATLAB code of the original model for anomalies and misspecifications.

- The replicated model code can be found at (https://www.comses.net/codebase-release/ua01596-e2cd-4979- 96c4-b1ad2ce9ac23/) (file name: Dynamics of Organizational Routines: A Model Replication).

- If skills are not approximately distributed uniformly among agents, this can lead to different results, as highlighted in the original study.

- Cycle time does not increase when an agent scrutinizes or hands off a task to another agent who accepts the problem. This simplifying assumption implies that scrutinizing tasks and handoffs requires no effort.

- Miller et al. (2012) provided no information regarding how they chose the number of simulation runs or regarding the coefficient of variation.

- Recall that the problem changes after the organization solves the fihieth problem.

- We acknowledge that this number of runs is rather high. In this study, we aimed to obtain particularly stable results to enable visual comparison with the original graphs. See, in this respect, discussions regarding Figures 4 and Figure 5. More recent approaches to determining the appropriate number of runs adopt a power analysis framework (Secchi & Seri 2017), which supports an argument for fewer simulation runs. As a matter of fact, Secchi & Seri (2017) concluded that the original simulation experiments with the model are overpowered, while the majority of investigated papers in their review lacked sufficient model runs and are therefore underpowered. Having too many runs poses the risk, besides the added computational costs, that economically insignificant results become statistically significant. For this reason, we argue that effect sizes should be considered to distinguish between economic and statistical significance. For a discussion of the problems of over- and underpowered simulation experiments, including issues with Error Type II, see Secchi & Seri (2017).

- Another advantage of having a replicated model is that we can now calculate the respective effect sizes. We calculated Cohen’s d for the relevant effects; they fall in the range assumed by Secchi & Seri (2017).

- Moreover, after we completed the replication we examined the code provided by Miller et al. (2012). The basic processes correspond to the flow chart depicted in Figure 1, and key model elements were implemented the same, conceptually, as in our replicated model.

- Miller et al. (2012) denominate these aspects as performative and ostensive routines.

- The term “organization” also refers to organizations within organizations; that is, departments are suborganizations within firms.

- Knowledge can represent experience gained in another organization or acquired in training. For example, an employee might learn to follow a new procedure that does not correspond to the previously lived routine.

- In Germany, in line with this result, Tesla avoids recruiting experienced personnel from the automotive industry (according to a personal conversation with one of the authors).

- The random shuffling of tasks in a sequence of \(k\) tasks allows the generation of \(k!\) distinct problems, or with 10 tasks, as in the experiment here, 10! = 3,628,800. An identical sequence is unlikely to reoccur.

- The occurrence probability that tasks are immediately repeated is very low. Agents with a misleading notion of what to do can get stuck in loops in which the problem is passed between agents. Such loops are broken in the model. Therefore, we exclude entries on the matrix diagonal for calculating the occurrence probabilities.

- For example, a new company might develop a particular behavior in its start-up phase. This behavior be- comes the company’s disposition (firm culture). The company might act according to this disposition even years later.

- The strategic learning curve can be approximated by a polynomial function of the fourth degree: \( y = 4e^{-09}x^4 - 5e^{-06}x^3 + 0.0028x^2 – 0.6979x + 89.655; R^2 = 0.99 \).

- An extended explanation according to the model design follows: An agent that is aware of a task performs it. Otherwise, the agent searches for help from another agent. The approached agent is likely to have different task awareness. Thus, both agents taken together are, with a higher probability, aware of what to do. The approached agent also knows other agents and is thus often able to refer the task to an agent that can perform it. A distant (random) search is then unnecessary.

- The formation of such routines depends on organizational size. In volatile environments, small organizations are more agile and form routines faster compared to larger organizations.

- If skills are not approximately distributed uniformly among agents, this can lead to different results.

- In pretests with the replication model, different submodels were tested to clarify ambiguous assumptions.

- Cycle time does not increase when an agent scrutinizes or hands off a task to another agent who accepts the problem. This simplifying assumption implies that scrutinizing tasks and handoffs require no effort.

- One might regard downsizing as an endogenous change.

- Recall that the problem changes after the organization solves the fiftieth problem.

- An averaged calculation of cycle time over several problem-solving instances would deteriorate the informative value of the effect sizes..

- The 35 factorial design and 5,000 repetitions of each simulation run yields 35 × 5,000 = 1,215,000 simulation runs in total.

- No main effect or interaction effects are observable for declarative memory capacity, because the capacity is not varied below the value of 1. Agents are always capable of solving a subsequent task. Moreover, in the initial routine-formation phase, declarative memory contains only correct entries. Therefore, it does not matter how often a correct, subsequent task is stored.

- Moreover, an appropriate number of simulation runs is incorporated to obtain representative results.

- Moreover, transactive memory always indicates correctly who knows what.

- The occurrence probability that tasks are immediately repeated is very low. Agents with a misleading notion of what to do can get stuck in loops in which the problem is passed between agents. Such loops are broken in the model. Therefore, we exclude entries on the matrix diagonal for calculating the occurrence probabilities.

Appendix

A: ODD protocol

Purpose

The model aims to show how the cognitive properties of individuals and their distinct types of memory affect the formation and performance of organizational routines in environments characterized by stability, crisis (see Miller et al. 2012) and volatility.

Entities, state variables, and scales

Entities in the model are agents, representing human individuals. The collective of agents forms an organization. Table 7 reports the model parameters. The global variables are the numbers of agents and tasks. By default, the organization comprises (\(n = 50\)) agents. The organization faces problems from its environment. A problem involves a sequence of (\(k = 10\)) different tasks (Miller et al. 2012). The organization must perform the tasks in a given order to solve a problem; the order of tasks defines the abstract problem in terms of its complete solution process. Once the organization performs each task in the required sequence, the problem is solved (Miller et al. 2012). The organization solves several problems over time, which can either recur or change in terms of the required task sequence. The time an organization requires to solve a problem is defined as cycle time (Miller et al. 2012), which represents organizational performance.

| Variable | Description | Value (Default) |

| n | Number of agents in the organization | 10, 30, 50 |

| k | Number of different tasks in a problem | 10 |

| a | Task awareness of an agent | 1, 5, 10 |

| pt | Probability that an agent updates its transactive memory | 0.25, 0.5, 0.75, 1.00 |

| pd | Probability that an agent updates its declarative memory | 0.25, 0.5, 0.75, 1.00 |

| wd | Declarative memory capacity of an agent | 1, 25, 50 |

Table 7 further defines the individual variables used to set agent behavior. Agents are heterogeneous in terms of skill, but the skills themselves are not varied and are thus not reflected by a variable. Each agent has a particular skill stored in its procedural memory that enables the agent to perform a specific task ( Miller et al. 2012). On the one hand, the number of agents equals at least the number of different tasks in a problem, thus ensuring that an organization can always solve a problem, if the organization can organize the task accomplishment in the defined sequential order. On the other hand, the number of agents can exceed the number of tasks (\(n > k\)) ( Miller et al. 2012). In such cases, the \(k\) different skills are assumed to be uniformly distributed among the agents.[29]

Any agent is aware of \(a\) randomly assigned tasks (Miller et al. 2012). Each agent is aware of a limited number of tasks of a problem (1≤ a ≤ k). An agent’s awareness set contains at least the task for which they are skilled, thus assuming that agents who can perform a specific task are also capable of recognizing this task. Agents are otherwise blind to unfamiliar tasks (Miller et al. 2012).

Declarative memory enables agents to memorize the subsequently assigned task once they have performed their task. Agents have limited declarative memory capacity (wd =1) and memorize a task with a probability set by the variable (\(p_d\) =0.5) (Miller et al. 2012). Further, agents can memorize the skills of other agents in their transactive memory. The number of agents and their skills which each agent can memorize is limited by the number of agents in the organization. The probability that an agent adds an entry to their transactive memory is defined by the parameter (\(p_t\) = 0.5) (Miller et al. 2012).

The agents are distributed across the organization. Scale and distance are not modeled explicitly, but time is crucial in two ways. On an operational dimension, the problem-solving process requires the accomplishment of tasks, as measured by the cycle time. An organization that consecutively solves problems over time might form routines.

Process overview and scheduling

The organization faces consecutive occurring problems. The generated problems trigger organizational activities. Except for the first task of each problem, the agents self-organize the problem-solving processes given the task sequences of the generated problems. The first task in each task sequence is assigned to an agent that is skilled to perform the task. An agent in charge of performing a task in a problem is also responsible for passing the next task in the sequence to another agent. Thus, the agent in charge might remember or must search for another agent that seems capable of handling the next task (Miller et al. 2012). Then, the agent in charge hands the problem over to the identified agent, who then becomes in charge of the problem (Miller et al. 2012).

Figure 8 depicts the schedule that an agent follows when in charge of a problem. An agent first scrutinizes the task. If the agent is aware of and skilled for the task, the agent updates its declarative memory and perform the necessary task. The agent then advances to the next task if the problem has not yet been solved (Miller et al. 2012).

An agent that lacks the skill to perform the task at hand starts a local search process. An agent that is aware of the task but not skilled consults its transactive memory. If the transactive memory reveals another agent skilled to perform the required task, the searching agent tries to hand the task off to this agent. An agent that is unaware of a task consults their declarative memory, which might reveal a task that is usually due. If declarative memory indicates a task (what usually should be done), the agent further consults the transactive memory (of who has the appropriate skill) to hand the task over to a skilled agent. If this local search is unsuccessful or if an agent’s memory is undeveloped, the agent proceeds with a distance search process to handoff the problem (Miller et al. 2012).

Distance search involves a random search for a skilled agent to hand over the problem. If the searching agent finds a skilled agent, the agent updates the respective types of memory and hands off the problem. An approached agent without the skill required for the task of the searching agent might nevertheless be able to make a referral to another agent. In this case, the searching agent hands off the task to the referred agent and updates the transactive and declarative memory (Miller et al. 2012). An unsuccessful search attempt results in a new random search.

As long as the performed task is not last in the problem, an agent advances to the next task of the problem. Once a problem is solved, a new problem is generated and a new problem-solving process is initiated (Miller et al. 2012).

Design concepts

Basic principle

The model design is abstract. Conceptually, it proceeds from the idea that organizational routines form as a result of individuals’ cognitive properties and activities. The model is designed from the perspective of distributed cognition: the individuals are distributed and have distinct properties. The model assumes that individuals self-organize the problem-solving process and adapt their behavior to recurrent or different problems. Individuals can learn, which affects the coordination of activities and organizational performance.

Emergence

Organizational routines emerge from individuals’ initially independent skill sets and capacities (Winter 2013). The micro-foundations on the individual level are thus well-reasoned and explicitly modeled. Organizational macro-behavior is not explicitly modeled. The organizational behavior that emerges from the properties of the individuals is analyzed. The presumed emergent phenomenon is that the modeled organization develops routines over time.

Adaption

The individuals in the organization adapt their activities to recurrent and changing problems. Recurrent problems reflect stable environmental conditions. In this case, the organization adapts to the problem by forming a routine. A crisis event is modeled as a one-time change in problem, which forces the organization to adapt to the new situation and learn a new routine. A volatile environment is modeled as a continuously changing problem, in which the organization has to cope with varying conditions. The organization might even instantiate routines to operate efficiently in such a volatile environment. In terms of the flexible use of action patterns and their adaption to certain situations, routine dynamic can be traced back to individuals (Howard-Grenville 2005). Since individuals perform activities contingent on their situations, routines can be applied flexibly in a volatile environment (Adler et al. 1999; Bogner & Barr 2000).

Objectives

The organizations’ objective is to organize the problem-solving process as efficiently as possible in terms of cycle time. Agents’ primary objective is to perform tasks and to organize the problem-solving process. Overall, agents follow this objective to ensure the completion of all task sequences for each occurring problem.

Learning

Learning is an important design concept. On the individual level, agents have three types of memory: procedural, transactive, and declarative. In procedural memory, agents store their skill (Miller et al. 2012). According to the model design, each agent owns one skill; agents are assumed to have learned the skill in prior training. Agents do not learn new skills; agents are assumed to be specialists in their roles. Agents learn through their transactive and declarative memory. Transactive memory allows the agents to store who knows what in the organization. Declarative memory enables the agents to learn what should usually be done given a problem’s task sequence. On the macro level, the organization can learn to handle problems in a routinized manner.

Prediction