Abstract

Abstract

- The proliferation of agent-based models (ABMs) in recent

decades has motivated model practitioners to improve the transparency,

replicability, and trust in results derived from ABMs. The complexity

of ABMs has risen in stride with advances in computing power and

resources, resulting in larger models with complex interactions and

learning and whose outputs are often high-dimensional and require

sophisticated analytical approaches. Similarly, the increasing use of

data and dynamics in ABMs has further enhanced the complexity of their

outputs. In this article, we offer an overview of the state-of-the-art

approaches in analysing and reporting ABM outputs highlighting

challenges and outstanding issues. In particular, we examine issues

surrounding variance stability (in connection with determination of

appropriate number of runs and hypothesis testing), sensitivity

analysis, spatio-temporal analysis, visualization, and effective

communication of all these to non-technical audiences, such as various

stakeholders.

- Keywords:

- Agent-Based Modelling, Methodologies, Statistical Test, Sensitivity Analysis, Spatio-Temporal Heterogeneity, Visualization

Introduction

Introduction

- 1.1

- Agent-based models (ABMs) have been gaining popularity

across disciplines and have become increasingly sophisticated. The last

two decades have seen excellent examples of ABM applications in a broad

spectrum of disciplines including ecology (Grimm

& Railsback 2005; Thiele

& Grimm 2010), economics (Kirman

1992; Tesfatsion

& Judd 2006), health care (Effken

et al. 2012), sociology (Macy

& Willer 2002; Squazzoni

2012), geography (Brown

& Robinson 2006), anthropology (Axelrod & Hammond 2003),

archaeology (Axtell et al. 2002),

bio-terrorism (Carley et al. 2006),

business (North & Macal 2007),

education (Abrahamson et al. 2007),

medical research (An & Wilensky

2009), military tactics (Ilachinski

2000), neuroscience (Wang et

al. 2008), political science (Epstein

2002), urban development and land use (Brown

et al. 2005), and zoology (Bryson

et al. 2007). This methodology now also penetrates

organizational studies (Carley

& Lee 1998; Lee

& Carley 2004; Chang

& Harrington 2006), governance (Ghorbani et al. 2013), and is

becoming actively employed in psychology and other behavioural studies,

exploiting data from laboratory experiments and surveys (Duffy 2006; Contini et al. 2007; Klingert & Meyer 2012).

- 1.2

- ABMs produce a rich set of multidimensional data on macro

phenomena, comprising a myriad of details on micro-level agent choices

and their dynamic interactions at various temporal and spatial

resolutions. Despite significant progress made in empirically grounding

ABM mechanisms and agent attributes (Robinson

et al. 2007; Windrum et

al. 2007; Smajgl et al.

2011), ABMs continue to harbour a considerable amount of

subjectivity and hence degrees of freedom in the structure and

intensity of agents' interactions, agents' learning and adaptation, and

any potential thresholds affecting switching in strategies. The

increasing complexity of ABMs has been further stimulated by improvements

in computing technology and wider availability of advanced computing

resources. These qualities demand a comprehensive (or at least

sufficient) exploration of the model's behaviour.

- 1.3

- To complicate matters further, an ABM is typically a stochastic

process and thus requires Monte Carlo sampling, in which

each experiment (or parameter setting) is multiply performed using

distinct pseudo-random sequences (i.e., different random seeds) in

order to achieve the statistical robustness necessary for testing

hypotheses and distinguishing multiple scenarios under varying

experimental or parameter settings. By "random seed", we mean the seed

for the random number generator. Thus, an ABM delivers a high volume of

output data rendering the identification of salient and relevant

results (such as trends) and the assessment of model sensitivities to

varying experimental conditions a challenging problem.

- 1.4

- All these complications apply not only to the analysis of

ABM output data but also to the model's design and implementation. The

massive diversity in outputs, often exhibiting temporal and spatial

dimensions, necessitates judicious model design, planning, and

application. Poor or unstructured design may lead to unnecessarily

larger outputs to reach the same (or even less) precise conclusions

that one may infer from outputs of well-designed models and

experimentation. The standards employed in the ABM field, such as ODD

(Overview, Design concepts, and Details) (Grimm

et al. 2006, 2010;

Müller et al. 2013)

and DOE (design of experiments) (Fisher

1971), have significantly improved transparency,

replicability, and trust in ABM results. However, the field continues

to lack specific guidance on effective presentation and analysis of ABM

output data, perhaps due to this issue's having less priority in ABM

social science research or due to technical barriers. Furthermore,

converging on universal standards remains elusive partly due to the

broad spectrum of research fields employing ABMs. Domain-relevant

metrics, analytical techniques, and communication styles are largely

driven by each discipline's target audience.

- 1.5

- Yet, there are common methodological challenges facing ABM

modellers in their path toward understanding, refining, and distilling

the most relevant and interesting results from a nearly-endless sea of

output data. While a modeller invests a significant amount of time and

effort in the development of an ABM itself, a comprehensive or

compelling analysis of the ABM output data is not always considered as

deserving the same resource-intensive attention. Proper output analysis

and presentation are vital for developing a domain-specific message

containing innovative contributions.

- 1.6

- This paper aims to provide an overview of the

state-of-the-art in how agent-based modellers contend with their model

outputs, their statistical analysis, and visualization techniques.[1] We discuss challenges

and offer examples for addressing them. The first topic deals with

several issues surrounding the study of variance in the model outputs

(i.e., stability) and its impact on both model design (e.g., simulation

runs or "samples") and analysis (e.g., hypothesis testing). The next

one addresses the state of sensitivity analysis and the complexities

inherent in the exploration of the space that encapsulates both the

parameters and the outcomes. The third topic focuses on the analysis

and presentation of spatial (including geospatial) and temporal results

from ABMs.

- 1.7

- We also survey the role of effective visualization as a medium for both analysis and exposition of model dynamics. Comments on visualization appear within each main topic as visualization strategies tend to be strongly defined and constrained by the topic matter. Finally, we outline outstanding issues and potential solutions which are deemed as future work for ourselves and other researchers.

Determining

Minimum Simulation Runs and Issues of Hypothesis Testing

Determining

Minimum Simulation Runs and Issues of Hypothesis Testing

- 2.1

- ABM researchers strive to expose important and relevant

elements in their models' outputs and consequently the underlying

complex dynamics in both quantitative and qualitative ways. Compelling

statements about an ABM's behaviours may be drawn from descriptive

statistics of distinct outcomes (e.g., mean and standard deviation) or

statistical tests in which outcomes are compared (e.g., t-test),

predicted (in the statistical sense, e.g., multiple regression), or

classified (e.g., clustering or principal component analysis). Given the

stochastic nature of most ABMs, these analytical exercises require an

outcome pool drawn from a sufficient number of samples (i.e.,

simulation runs).

- 2.2

- The quantity of ABM output samples has several

ramifications to experimental design and the quality of the analysis.

For those large and complex ABMs whose longer run times prohibit the production

of large samples, the relevant question is the minimum number of

required runs. Conversely, expedient ABMs offer the temptation of

producing far greater sample counts thereby increasing the sensitivity

of statistical tests possibly to the point of absurdity. That is, one

might produce so many samples such that traditional tests expose

extremely small and contextually inconsequential differences. We focus

our discussion on the methods for the determining the number of minimum

runs. Implications of having too many samples are discussed in Appendix

B.

Minimum Sample Size (Number of Runs)

- 2.3

- The determination of the minimum sample size partly relies

on the analytical objective. One common objective is a statistical

description of the outcomes typically in the form of means and standard

deviations (or alternatively, variances). Since the shape of a

model's output distributions are usually a priori unknown,

the point or sample size at which outcome mean and variance reaches

relative quiescence or stability is crucial to accurate reporting of

the descriptive statistics. Otherwise, the statistics would harbour too

much uncertainty to be reliable. Unfortunately, all of the different

criteria for determining this point of stability suffers from some

degree of subjectivity, and thus it falls on the analyst to wisely make

the selection. Furthermore, these approaches appear to remain either

underused or unknown to many ABM researchers (Hamill

2010). In fact, a survey of some of ABM literature reveals

sample sizes to be too low, conveniently selected (sample sizes of 100

or less are common), or exorbitantly high (Angus

& Hassani-Mahmooei 2015).

Variance Stability

- 2.4

- Assessing variance stability requires a metric to measure

the uncertainty surrounding the variance (or the variance of variance

if you will). Law and Kelton (2007)

and Lorscheid et al. (2012)

offer such metrics both of which rely on some functional ratio between

the variance and the sample mean. Law & Kelton's approach seeks

a sample size in which the variability remains within some predefined

proportion of the confidence interval around the mean (confidence

interval bound variance), thus it is bound to the

assumptions of normality, namely that the mean has a Gaussian

distribution. Hence, the researcher must select this proportion from

outputs of a trial set of runs. Lorscheid et al.'s method eschews those

assumptions by employing the coefficient of

variation and a fixed epsilon

(\(E\)) limit of that metric. While these are dimensionless metrics,

their use is problematic for simulation studies in which multivariate

outcomes from parameter effects are analyzed.

- 2.5

- The coefficient of variation is simply the ratio of the

standard deviation of a sample (\(\sigma\)) to its mean (\(\mu\)):

$$ c_V^{\phantom{x}}=\frac{\sigma}{\mu} $$ - 2.6

- This scaling offers a similar interpretation as the

confidence interval (C.I.): \(c_V^{\phantom{x}} \rightarrow 0\) is

equivalent to \(t(0 \notin \mathrm{C.I.}) \rightarrow \infty\) and the

\(p\)-value approaches 0. That is, when the standard deviation shrinks

relative to the mean, the probability of the confidence interval

spanning across the value of 0 drops precipitously. The coefficient of

variation (\(c_V^{\phantom{x}}\)) will exhibit substantial variance for

small sample sizes just like the standard error of the mean. For

example, the \(c_V^{\phantom{x}}\) of a single ABM outcome obtained

from a set of five runs will vary more with the same metrics taken from

other sets of five runs than if each set contained far more runs, say

100. Lorscheid et al. compare the \(c_V^{\phantom{x}}\)'s of

differently sized sets of runs (e.g., the \(c_V^{\phantom{x}}\) from 10

runs, then 100, 500, and so forth). The sample size at which the

difference between consecutive \(c_V^{\phantom{x}}\)'s falls below a

criterion, \(E\), and remains so is considered a minimum sample size or

minimum number of ABM runs. For example, if an outcome drawn from runs

of different sample sizes, \(n \in\{10, 500, 1000, 5000, 10000\}\),

yields the \(c_V^{\phantom{x}}\)'s (rounded to 1/100) \(\{0.42, 0.28,

0.21, 0.21, 0.21\}\) and we select \(E = 0.01\), we would consider the

third sample size (\(n = 1000\)) as the point of stability. These

\(c_V^{\phantom{x}}\) stability points are obtained for all ABM

outcomes of interest (in a multivariate setting), and thus the minimum

number of runs for the ABM is the maximum of these points:

$$ n_{\min} = {\mathop{\rm argmax}\limits}_{n} | c_V^{x,n} - c_V^{x,m}| < E,~\forall x~\mathrm{and}~\forall m>n $$ where \(n_{\min}\) is the estimated minimum sample size; \(x\) is a distinct outcome of interest; and \(m\) is some sample size \(> n\) for which the \(c_V^{\phantom{x}}\) (of each outcome) is measured.

- 2.7

- However, the fixed \(E\) favors those \(\mu\) sufficiently

larger than 0 and also larger than its corresponding \(\sigma\) and

penalizes \(n_{\min}\) for outcomes with \(\mu\) closer to 0. That is,

the more likely an outcome's confidence interval encompasses 0, the

erroneously larger the estimation of \(n_{\min}\) will be. Conversely,

the fixed \(E\) renders the procedure too effective and results in an

underestimation of \(n_{\min}\) for those C.I.'s that reside far from 0,

relative to \(\sigma\). Therefore, we urge some caution in using

\(c_V^{\phantom{x}}\) to determine the minimum sample size and applying

it only to ABMs for which the outcomes of interest are prejudiced

against attaining a value of 0. As such, Lorscheid et al.'s approach

determines a minimum sample size not just based on variance stability

but also the likelihood an outcome's confidence interval contains 0.

- 2.8

- Alternatively, one might consider just variance stability

without any consideration of the mean value. Following Lorscheid et

al.'s strategy of assessing stability from metrics on an outcome for a

sequence of sample sizes, we track the windowed

variance of simple outcomes from several canonical

statistical distributions as well as an ABM model. The distributions we

employ here for demonstration purposes are the normal (or Gaussian),

uniform, exponential, Poisson, \(\chi^2\), and t

distributions.[2]

While ABM outputs do not always conform to one of these parametric

distributions, the ones we examine here are distinct enough to provide

us with a sense of variance stability for a spectrum of distributions.

These distributions will serve as proxies for ABMs' outcomes and, for

our purposes, have been parameterized so that their theoretical

variance \(\sigma^2\approx 1\). We also include an outcome from a

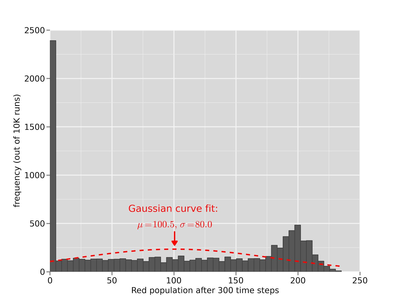

simple ABM model of Birth Rates (Wilensky

1997). This ABM entails two dynamically changing

populations (labelled "red" and "blue"). Here, our outcome is the size

of the "red" population. For further details, see Figure 17 in

Appendix C.

- 2.9

- In Figure 1a, we

offer variances for varying sized samples of Gaussian variates (i.e.,

scalars drawn from the Gaussian distribution parameterized to have a

variance of 1). At low sample sizes, there is considerable variance

surrounding the sample variance itself as evidenced by the "noisiness"

of the variance from one sample size to the next. After a certain point

(roughly \(n = 400\)), this outer variance

appears to stabilize and continues to further converge to 0 albeit

slowly.

- 2.10

- The outer variance at each sample size can then be measured

using the variance of proximal sample sizes. For example, the outer

variance for \(n = 10\) is calculated from variance of the sample

variances of \(n\in\{10,\ldots, 10+(W-1)g\}\), where \(W\) is some

predetermined size of the window and \(g\) is our sample size

increment; we select \(g=W=10\), and we so consider

\(n\in\{10,20,\ldots,100\}\) for the variance surrounding \(n=10\).

Notationally, this outer variance may be expressed as \(\sigma^2\)

(\(s^2\)) but given its estimation using windows, we assign it

\(\omega_n^2\), where \(n\) is the sample size. In Figure 1, we chart the \(\omega_n^2\)

relative to its maximum value (\(\frac{\omega_n^2}{\max \omega^2}\))

for each distribution.

Figure 1. Sample Size vs. Variance Stability. The colours in the right plot denote the distribution: normal, uniform, exponential, Poisson, \(\chi^2\), Student's t, and Birth Rate ABM. - 2.11

- The colours distinguish the seven distributions. The grey,

dashed horizontal line expresses our semi-subjective criterion (of

0.20) under which the relative \(\omega_n^2\) must reside in order for

\(n\) to be deemed a "minimum sample size". The speed at which this

condition is met varies considerably among the distributions,

highlighting the need to forego distributional assumptions regarding

ones ABM outputs in variance-based minimum sample size determination,

unless the distributional shapes are well-identified. This approach for

assessing variance stability bears two elements of further

subjectivity. Firstly, the point of stability may be identified by

either the first \(n\) at which the criterion condition is met or the

first \(n\) at which all further sample sizes meet the condition. The

red and green vertical lines in Figure 1a respectively denote these

points of stability for the Gaussian distribution. Secondly, the outer

variance could have been assessed using a larger pool of variates at

each \(n\) rather than estimated under windows. However, in keeping

with the strategy of minimizing the number of test simulation runs

\(n_{\min}\), we report the findings of window-based \(\omega^2\)

rather than \(\sigma^2\) (\(s^2\)).

Effect Size

- 2.12

- In traditional statistical analysis, the common approach

for determining minimum sample size requires one to first select the

size of a detectable effect (i.e., a statistic such as the mean or

difference of means scaled by a pooled standard deviation). This

approach also requires a selection of acceptable levels of the type

I and type II

errors. A type I error is coarsely the probability (in the frequentist

sense) that the null hypothesis is rejected when in fact it is true.

The type II error is the converse: the likelihood that the null

hypothesis is retained when the alternative hypothesis is true. For

example, one might compare the means of two ABM outcomes each from

separate sets of sample of runs. These outcomes may be borne of distinct

model parameterizations (a necessary though not sufficient condition

for yielding a true difference) and measured to be significantly

different under a rudimentary t-test.

However, a small sample size will penalize the test which may not

report a statistically significant difference between means of those

outcomes: a type II error. Alternatively, these outcomes may arise from

identical parameterizations yet the t-test

erroneously reveals a significant difference: a type I error. The rates

of type I and II errors are expressed as \(\alpha\) and \(\beta\). The

converse of the type II error (\(1-\beta\)) is called the "power"

level.

- 2.13

- The minimum sample size \(n_{\min}\) can then be computed

as

$$ n_{\min} \geq 2 \frac{s^2}{\delta} (t_{V,1-\alpha/2} + t_{V,1-\beta/2})^2 $$ where \(s\) is the standard deviation of the outcome or the pooled standard deviation of two outcomes, \(\delta\) is lower bound on the absolute difference in means that is to be classified as significantly different; \(t\) is the t-statistic (or the quantile function of the t distribution); \(\nu\) is the degrees of freedom (here \(n_{\min} - 1\)), and \(\alpha\) and \(\beta\) are the levels of type I and II errors respectively. In lay terms, the minimum sample size occurs at the point at which both type I and type II errors occur at the desired critical levels as determined by the t-test, hence the employment of the t distribution. This approach has been suggested in the ABM literature (e.g., Radax & Rengs 2010). As \(n_{\min}\) appears on both sides of the equation, trial-and-error or algorithmic iterations can usually converge on \(n_{\min}\). A similar equation is employed when the outcome is a proportion \(\in [0, 1]\). The non-central t distribution can also be used for sample size determination (as in the R statistical package).

- 2.14

- A close inspection of this approach reveals test

sensitivity to the outcome distribution's departure from the

normal. In Table 1, we

empirically measure the power level (and hence the level of type II

error) by drawing pairs of variate sets from some typical distributions

and the Birth Rate model, parameterized such that identical effect sizes

of 0.5.[3]

Thus, an insignificant t-test comparison for a pair

of variate sets is tantamount to a type II error. For each distribution

type and sample size \(n\), the comparison was performed for 5000 pairs

of variate sets each of size \(n\). Each set pair was compared, and we

monitor the proportion of pairs of these sets that yielded a

significant \(p\)-value: an empirically-derived power level. The

empirical power levels for a range of sample sizes \(n\) are

graphically shown in Figures 15

and 16 in Appendix A.

Table 1: Minimum Sample Sizes for Outcome Distributions. \(n_t\), \(n_e\), and \(n_W^{\phantom{x}}\) are the theoretically-derived, empirically-derived, and Wilcox-test-based minimum sample sizes, \(n_{\min}\). Distr. \(n_t\) \(n_e\) \(n_W^{\phantom{x}}\) \(n_e-n_t\) \(n_W^{\phantom{x}}-n_t\) Normal 64 65 68 1 4 Exponential 64 59 78 \(-5\) 14 Poisson 65 61 64 \(-4\) \(-1\) Birth Rate 64 65 70 1 6 Birth Rate (\(d= 1.0\)) 18 19 26 1 8 - 2.15

- We observe incongruities between the theoretical

\(n_{\min}\) (\(n_t\)) and the empirically-derived \(n_e\). In fact,

the power calculation overestimates \(n_{\min}\) for the skewed

distributions (i.e., the exponential and the Poisson). While the

differences in these \(n_{\min}\) are relatively minor, they could have

a material benefit for large scale ABMs for which each run is costly.

However, in these cases, using the t- test for

exposing a predetermined effect size has to be deemed appropriate. For

these surveyed distribution types, the empirical distributions of the

means themselves frequently pass the Shapiro test of normality hence

allowing for the use of the t-test.

- 2.16

- Given the sensitivity of the traditional t-test

to distributional skewness as well as the uncertainty of the

distributional shape in ABM outcomes, one might turn to a more

conservative test, the Wilcoxon ranked sum test

(also known as the Mann-Whitney test);

the \(n_W\) column reports this test's suggested minimum

sample sizes. Interestingly, the more efficient Wilcoxon test appears

to propose a lower minimum size for the Poisson distribution.

- 2.17

- When we assess the efficiency of calculating \(n_{\min}\)

for the Birth Rate ABM, we find that, despite the flatness plus

bimodality of the outcome distribution, the calculation of \(n_{\min}\)

is almost accurate, and the Wilcox test is modestly conservative

compared to the exponential distribution.

Multivariate Stability

- 2.18

- Since most ABMs typically produce multiple outcomes, the

calculation of the required sample size would have to consider all

outcomes of interest. Furthermore, analysis of ABMs often entails an

exploration of parameter settings (or the parameter space) in order to

understand the dependencies between key input parameters and their

outcomes including their variability. Given the complexity of ABMs, the

outcomes' variance may or may not be constant (i.e., homoskedastic)

across the parameter settings. Hence, the task of understanding model

output sensitivity to different experimental conditions that are

relevant to the research question is vital. While the topic of

sensitivity analysis is further elaborated in 3.1, we discuss it here

briefly given its role in determining the adequate run sample size

applicable to all the chosen parameter settings. Well-structured DOE

(described further in Section 3)

can be very helpful to comprehensively explore model variabilities

corresponding to multiple model parameters.

Visualization for statistical issues

- 2.19

- One visualization approach for examining univariate ABM

outcome distributions is the violin plot

which combines elements of a box plot and a kernel density plot, with a

smoothed estimation of outcomes' variances across the ranges of

factors/parameters (see, e.g., Kahl

& Hansen 2015). Figure 2

illustrates the multifaceted influence of six variables (each with 3

values following a \(3^k\) factorial design) on the output measure

("compensation payment"). Details may be found in Lorscheid (2014).

Figure 2. Violin plots for a univariate analysis of potential control variables. Source: Lorscheid (2014) - 2.20

- The grey area indicates the dispersion of values. The white dot in the plot indicates the median of the data set. The black line above the median is the area of the second quartile, and the black line below the median is the third quartile of the data set. For a detailed description of violin plots see Hintze and Nelson (1998).

Solution space exploration

and sensitivity analysis

Solution space exploration

and sensitivity analysis

- 3.1

- There are a variety of topics that may be gathered under

the broad heading of parameter or input-output space exploration,

including optimization, calibration, uncertainty analysis, sensitivity

analysis, as well as the search for specific qualitative model features

such as "regime shifts" and "tipping points". In all cases, the goal is

to provide additional insight into the behaviour of the ABM through the

examination of certain parameter settings and their corresponding

output measures.

- 3.2

- We will first discuss "exploration" in a broad sense,

followed by a more detailed discussion of a variety of methods for

performing one particularly important exploration task: sensitivity

analysis.

Input/Output Solution Space Exploration

- 3.3

- One of the most common forms of parameter space exploration

is manual (or human-guided)

exploration (also called the

"trial-and-error method" or "educated guessing"). This approach can be

computationally efficient if guided by an individual familiar with the

model's dynamics and/or outputs. The intuitions of a model's

author/developer, a domain expert, or a stakeholder can inform

parsimonious, iterative parameter selection in such a way as to

generate and test relevant hypotheses and minimize the number of

regions searched or the number of required simulation runs. However,

this exploration strategy is vulnerable to human bias and fatigue

leading to a disproportionate amount of attention paid to the target

phenomena and the neglect of large portions of the model's behaviour

space. Distributing the burden of the exploration task (e.g., crowd

sourcing) may address some of its limitations. Nevertheless, more

systematic, automated, and unbiased approaches are required to

complement the shortcomings of solely human-guided searching, which

will always play a role in space exploration, especially in its

preliminary stages.

- 3.4

- The simplest of the systematic exploration approaches fall

under the class of regular sampling techniques

in which the parameters are chosen (or sampled) in a systematic manner

to ensure their having certain statistical or structural properties.

Some of these techniques are random, quasirandom,

gridded/factorial, Latin hypercube,

and sphere-packing. Sampling may globally consider

the entire parameter space or focus locally on a particular region

(e.g., altering one parameter at a time for "univariate sensitivity

analysis"). The well-established methodological history of DOE and the

recent literature on design and analysis of simulation experiments

(e.g., Sacks et al. 1989)

can guide the sampling strategy. However, the complexity of ABMs (and

their outputs) can render classic DOE inappropriate as noted by Sanchez

and Lucas (2002). Classic

DOE (a) assumes only linear or low-order interactions among

experimental parameters (or factors) and outputs, (b) makes little or

no provision for the iterative parameter selection process (i.e.,

sequential virtual experiments), and (c) also assumes typical error

(Gaussian and unimodal) in the output. An example of multi-modal ABM

output is presented in Appendix C. Thus, traditional DOE methods, while

useful for ABMs, ought to be implemented with caution and consideration

for appropriate methods that address complexities in the output.

Research into DOE methods for ABMs is still evolving (Klein et al. 2005; Kleijnen et al. 2005; Ankenman et al. 2008; Lorscheid et al. 2012).

- 3.5

- Research into more sophisticated exploration techniques has

been informed by meta-heuristic searching,

optimization algorithms, and machine

learning. Researchers propose the use of genetic

algorithms (GA) (Holland 1975)

for a wide range of exploration tasks including directed searches for

parameters that yield specific emergent behaviours (Stonedahl & Wilensky 2011),

parameter optimization (Stonedahl

et al. 2010), calibration/parameter estimation (Calvez & Hutzler 2006; Heppenstall et al. 2007; Stonedahl & Rand 2014)

and sensitivity analysis (Stonedahl

& Wilensky 2010). The query-based

model exploration (QBME) paradigm provided by

Stonedahl and Wilensky (2011)

expands Miller's (1998)

application of GA in ABM output exploration. In QBME, parameters

producing user-specified model behaviours are discovered through

automation such as GAs, thus inverting the traditional workflow (see

Figure 3). Stonedahl and

Wilensky (2011)

demonstrate QBME for identifying convergence, divergence, temporal

volatility, and geometric formations in models of collective animal

motion (i.e., flocking/swarming).

Figure 3. Query-Based Model Exploration (QBME). This framework for exploring ABM parameter-spaces exploits genetic algorithms (or other meta-heuristic search algorithms) to efficiently search for parameters that yield a desired model behaviour. Source: Stonedahl and Wilensky (2011) Sensitivity analysis: Approaches and challenges

- 3.6

- Sensitivity analysis

(SA) is a variation of parameter/input-output space exploration that

focuses on model response to changes in the input parameters (Figure 4). Specifically, the researcher

seeks to identify parameters for which small variations most impact the

model's output.

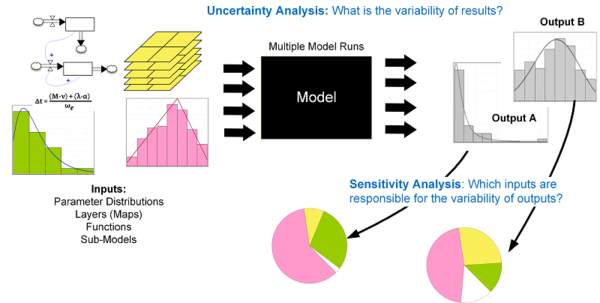

Figure 4. Uncertainty and sensitivity analysis as part of the modelling process. Source: Ligmann-Zielinska et al. (2014) - 3.7

- This discovery can aid in prioritizing prospective data

collection leading to improved model accuracy, reduction of output

variance, and model simplification (Ligmann-Zielinska

et al. 2014). Model insensitive parameters may even be

relegated to mere numerical constants thereby reducing the

dimensionality of the input parameter space and promoting model

parsimony; this simplification process is referred to as "factor

fixing" (Saltelli et al. 2008).

Ignoring these non-influential input parameters can have ill-effects on

the model by increasing its computational cost and also on its

reception when these parameters are controversial for stakeholders not

involved with the model's development (Saltelli

et al. 2008).

- 3.8

- The proliferation of various SA methods stems from the

variety of ABM styles and research problems ABMs address as well as

from the availability of increasing computational capacity (Hamby 1994; Saltelli et al. 2000).

Currently, the ABM practice of SA has entailed one or more of the

following methods: one-parameter-at-a-time, elementary

effects, standardized regression coefficients,

meta-modelling, and variance-based

decomposition (Thiele et

al. 2014; ten Broeke et al.

2014). Below, we briefly discuss each of these, their

advantages, and drawbacks, in the context of ABMs.

- 3.9

- In one-parameter-at-a-time

(OAT), each input parameter in turn is examined over a set of values

(defined either ex ante to the SA or dynamically

during SA) and in isolation by holding the other parameters at a

constant baseline. Meanwhile, the effects of these marginal (i.e.,

one-at-at-time) parameter changes are monitored, and repeated

iterations increase the procedure's robustness. Hassani-Mahmooei and

Parris (2013),

for example, applied OAT to their ABM of micro-level resource conflicts

to identify preferable initial conditions and to evaluate the influence

of stochasticity on the model; the similarity of outcomes within a

threshold demonstrated the model's insensitivity to randomness. OAT's

simplicity while attractive also exposes its limitations in ABM SA (Ligmann- Zielinska 2013). For

one, the impactful and relevant values for each input parameter may be a

priori unknown thus rendering any prioritization of

parameters difficult and the search for key parametric drivers

inefficient. Also, the marginal nature of the parameter search space

surrounding the baseline obscures parameter interactions and severely

shrinks the input hypercube with larger parameter sets. For example,

with as few as 10 input parameters, OAT covers only 0.25% of the input

space (for a geometric proof, see Saltelli and Annoni (2010)).

- 3.10

- Elementary effects

(EE) expands on OAT by relinquishing the strict baseline. That is, a

change to an input parameter is maintained when examining a change to

the next input rather than resuming the baseline value (as done in

OAT). Passing over the parameter set is multiply repeated while

randomly selecting the initial parameter settings. These perturbations

in the entire parameter space classify EE as global SA

(Saltelli et al. 2008).

Originally proposed by Morris (1991)

and improved by Campolongo et al. (2000),

EE is suited for computationally expensive models having large input

sets and can screen for non-influential parameters.

- 3.11

- Global SA may also be performed through estimation of standardized

regression coefficients (SRC), which in its basic

form succinctly measures the main effects of the input parameters

provided the relationships between the parameters and the outcomes are

primarily linear. A standardized regression coefficient

expresses the magnitude and significance of these relationships as well

as the explained variance. More precisely, the square of the

coefficient is the variance explained.

- 3.12

- One glaring limitation of SRC is its ability handle spatial

inputs (of spatial ABMs) unless a small set of scalars or indices can

sufficiently serve as proxies for entire maps (Lilburne & Tarantola 2009).

Also, SRC can expose lower-order effects but not complex

interdependencies (Happe et al. 2006).

- 3.13

- Meta-modelling (or

emulation) can address the low-order limitations of SRC. A meta-model

(emulator, a model of a model) is the surrogate representation of the

more complex model (like ABM) created in order to reduce the

computational time of the simulations necessary for SA. For example, Happe et al. 2006 collated

model responses from a \(2^k\) factorial design on a relatively small

set of parameters and fitted a second-order regression. Meta-modelling

can be computationally efficient and not necessarily require large

amounts of data. For even higher order effects, meta-modelling methods

such as Gaussian process emulators are required (Marrel et al. 2011).

- 3.14

- Variance-based SA

(VBSA) is considered the most prudent approach for evaluating model

sensitivities as it does not assume linearity (Ligmann-Zielinska &

Sun 2010; Fonoberova

et al. 2013; Tang &

Jia 2014). In VBSA, the total variance of a given output is

decomposed and apportioned to the input parameters including their

interactions (Saltelli et al. 2000;

Lilburne & Tarantola

2009). Two indices per input (\(i\)) are drawn from the

partial variances: a first-order (main effects) index \(S_i\) and a

total effects index \(ST_i\). \(S_i\) is the ratio of \(i\)'s partial

variance to the total variance. \(ST_i\) is the sum of all non-\(i\)

indices (\(\sum S_{-i}\)) and captures interactions between \(i\) and

the other inputs (Homma &

Saltelli 1996). \(S_i\) alone is sufficient for decomposing

additive models, so VBSA is unnecessary for models known to produce

largely linear output as calculation of the index pairs is

computationally expensive requiring large sample sizes (Ligmann-Zielinska &

Sun 2010); for example, VBSA for \(k\) parameters requires

\(M\) (\(k+1\)) model runs where \(M>1000\). This computational

load may be reduced through parameter (or factor) grouping (Ligmann-Zielinska 2013;

Ligmann-Zielinska et

al. 2014), parallelization (Tang

& Jia 2014), or quasi-random sampling (Tarantola & Zeitz 2012).

Situations in which inputs are exogenously correlated may result in

non-unique VBSA (Mara &

Tarantola 2012). While recent methods addressing these

dependencies have been developed (Kucherenko

et al. 2012; Mara &

Tarantola 2012; Zuniga et

al. 2013), they still require elaborate DOE coupled with a

very large sample size and algorithmic complexity.

- 3.15

- Genetic algorithms (GAs)

may also be used for SA (Stonedahl

& Wilensky 2010). Parameters are altered under the

genetic paradigm of reproduction in which pairs of "fitter" parameter

sets exchange subsets. The fitness (or objective) function may be

tailored to expose model sensitivities to its parameters (as opposed to

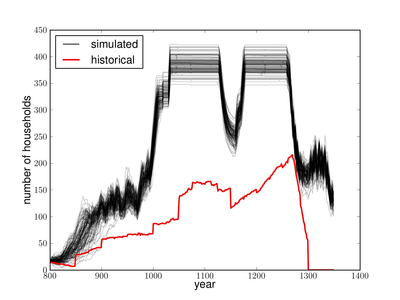

calibration). For example, Stonedahl and Wilensky (2010) allowed GAs to search

through 12 parameters of the "Artificial Anasazi" ABM (Dean et al. 2000; Janssen 2009) in order to

induce responses departing far from the empirical, historical values,

while constraining the search to a limited range (\(\pm\)10% of their

calibrated settings). See Figure 18

in Appendix D for a plot of the outlier results.

- 3.16

- The reliance on mean and variance for distributional

information in many of the practiced methods of SA is insufficient for

more complex distributions. Future research should investigate

moment-independent methods (Borgonovo

2007; Baucells

& Borgonovo 2013).

Visualization in sensitivity analysis

- 3.17

- Visualizations of the input parameter-output relationships

are an integral part of SA. In scatter-plots,

these relationships are directly and simply plotted potentially

revealing dependencies (see Figure 5).

We used the simple Schelling segregation model (Schelling 1969) of red and

green agents on a 100x100 grid implemented in Agent Analyst (http://resources.arcgis.com/en/help/agent-analyst/).

The model contains four uniform parameters (lower and upper bounds in

parentheses): number of agents (3000, 6000; discrete), tolerance to

agents of different colour (0.2, 0.5), random seed (1, 10000; discrete),

and percent of green agents (10, 50). We measured agent migration

(total number of agent moves during model execution) for \(N = 1280\)

model runs.

Figure 5. Sensitivity analysis with scatterplots. Scatterplots of total migration versus the four model inputs at \(t = 100\); note that number of agents has more influence on the variability of total agent migration than the other inputs. - 3.18

- Typically, the \(y\)-axis and \(x\)-axis (for a

two-dimensional scatterplot) express values for an output and an input

parameter, respectively. Scatterplots are especially useful when the

dependencies are structured, the output is a scalar, and the number of

model parameters is limited allowing for an unencumbered enumeration of

parameters and output combinations in separate scatterplots. More

complex model behaviours require alternative visualization styles such

as pie charts to display

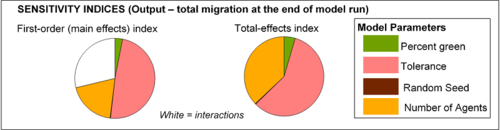

variance partitions (see Figure 6).

Figure 6. Sensitivity indices obtained from decomposition of migration variance at \(t = 100\). Note that almost 30% of migration variance is caused by interactions among inputs – mainly tolerance and number of agents. - 3.19

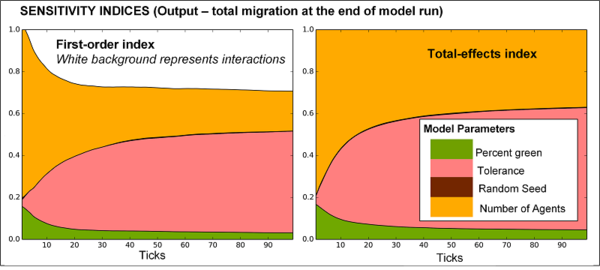

- A snapshot of variance decomposition at

the end of the model run (e.g., Figure 6)

may be insufficient in assessing the importance of parameters and

consequently their prioritization (Ligmann-Zielinska

& Sun 2010). Thus, visualizing variance decomposition

temporally will reveal parameter stability over the course of the model

run (see Figure 7). Spatial

outputs such as land use change maps may also receive similar treatment

to reveal the extent of outcome uncertainty in regions (or clusters)

due to specific parameters (Ligmann-Zielinska

2013).

Figure 7. Time series of sensitivity indices obtained from decomposition of migration variance measured over time. The example demonstrates that parameter sensitivities can considerably vary during simulation, with number of agents dominating the variance at \(t = 10\), and tolerance dominating the variance at \(t = 100\). - 3.20

- SA for multiple outcome variables in an ABM incurs additional challenges due to differences in each parameter's impact on the outcomes (see Figure 4). This issue is of particular concern for model simplification and demands either one single, well-chosen outcome that is adequately representative of the model's behaviour or more conservatively, the difficult task of undergoing SA across the whole spectrum of outputs: scalars, time- and space-dependent measures.

Spatio-Temporal

Dynamics

Spatio-Temporal

Dynamics

-

Temporal and spatial dimensions of ABM output data: challenges

- 4.1

- As a stochastic process, an ABM generates (or is capable of

generating) time series (TS) data. To a slightly

lesser extent, ABMs also operate within topological boundaries often

expressed as a spatial landscape, whether empirical (e.g., Parker et al. 2002; Bousquet & Le Page 2004;

Heppenstall et al. 2012;

Filatova 2014) or

stylized (e.g., early use by Schelling

1969, 1978; Epstein & Axtell 1996).

Similar to other ABM outcomes, both the TS and spatial data are borne

out of complex endogenous dynamics over which the modeller exerts full

control. Thus, it is rarely the case that the output data is produced

by a single component of the model. Instead, most of ABM TS and spatial

output recorded embody the long list of unique features that ABMs

exhibit such as emergence rather than aggregation at the macro-level,

interaction rather than reaction at the meso-level, and non-linearity

rather than linearity of processes and decision-making at the

micro-level. Spatial maps generated by ABMs may also capture eventual

spatial externalities, path-dependencies, and temporal lag effects.

These characteristics render the analysis of ABM outputs less

appropriate for more traditional tools. These issues derive from the

reasons ABM are used in the first place.[4]

We consider this challenging nature of TS and spatial output analysis

in the following sections.

Time: approaches and visualization techniques

Time series generated by ABMs (ABM TS) represent a myriad of temporal outcomes, such as evolving agent characteristics (e.g., sociodemographics, utility, opinions); agent behaviours/decisions (e.g., strategies, movements, transformations); or measures descriptive of the model state (e.g., agent population or subpopulation counts). All these are often presented as simple line graphs representing 1) outcomes of individual agents of special interest (Squazzoni & Boero 2002, Fig. 9) or 2) aggregated statistics (such as mean or median) over the entire agent population, subgroups, or an individual (with measurements over several runs) under varying levels of temporal granularity (e.g., a moving window covering several time points) (Izquierdo et al. 2008). Comparisons of multiple TS are often facilitated by the inclusion of confidence intervals (Raczynski 2004, Fig. 4) and occasionally performed against experimental/empirical (Richiardi et al. 2006; Boero et al. 2010, Fig. 1) or theoretical (Takahashi & Terano 2003, Fig. 4) outcomes or expectations (Angus & Hassani-Mahmooei 2015). TS comparisons have also been performed for calibration purposes (Richiardi et al. 2006). - 4.2

- Despite the proliferation of ABMs, effective TS analytical

techniques remain under-used (Grazzini

& Richiardi 2015). Angus and Hassani-Mahmooei (2015) surveyed over 100 ABM

publications in JASSS and found very few instances

of additional

(statistical) modelling of TS data. Only in agent-based financial market

research does one find relevant TS statistical and econometric analysis

(Yamada & Terano 2009;

Chen et al. 2012; Neri 2012).

- 4.3

- In this section, we discuss elements of TS analysis in the

context of ABMs and present some basic and some compelling examples,

while stopping short of expounding formal TS modelling such as

auto-regressive models.

- 4.4

- Among the techniques for analysing ABM TS, we consider time

series decomposition to be one of the more useful

methods. Decomposition entails partitioning a TS into four components:

trend, cyclical, seasonal, and random components. The prominent trend

is the most structured of these components and depicts

the long-term linear or non-linear change in the TS data. The seasonal

component exhibits regular periodicity due to some fixed external cycle

such as seasons, months, weeks, or days of the year. Exogenous model

events such as regular additions of a fixed count of agents to the

agent pool are also considered seasonal. Cycles having irregular

periodicity constitute the cyclical component.

Finally, the residual or random component captures

the unexplained variation remaining after the prior three components

are filtered from the TS.

- 4.5

- The spectrum of TS analysis techniques runs from the

calculation of moving averages

and linear filtering (see

Figures 8a and 8b) to the more

sophisticated exponential smoothing

and autoregressive modelling.

Furthermore, ABM relevant ergodicity tests may be applied to infer

stationarity of statistical moments (e.g., mean, variance, skewness,

kurtosis, etc.) hence equilibrium of those moments across a pool of

simulation runs (Grazzini 2012).

- 4.6

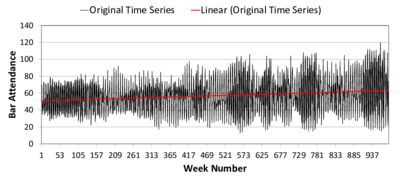

- For an example of ABM TS analysis, we examine the output of

the well-known El-Farol "bar patron" game-theoretic ABM (Rand &

Wilensky 2007). The initial conditions are: memory size = 5, number of

strategies = 10, overcrowding threshold = 50. The number agents

increases by 2% every 52 steps. Figure 8a

presents the original data and its linear increasing trend (in red).

Subtle structure in the TS is exposed when we plot a moving average

along with its decomposed trend (obtained via exponential smoothing)

(Figure 8b).

(a) Linear trend (b) Moving average

(a) Linear trend (b) Moving average Figure 8. Time Series of Bar Attendance in El Farol ABM. The black and grey series represent the original data; the red line is the linear fit (left) or decomposed trend (right); and the blue line is a moving average. The upper subfigures are labelled (a) and (b). The lower subfigures are (c) and (d). - 4.7

- The model behaviour is augmented to include an arbitrary,

one-time increase in population increase while the gradual increase is

reduced to 0%. While the linear trend for the new results (Figure 8c) coarsely captures the population

increase, the sudden change is made starkly visible using a moving

average (plus decomposed trend) (Figure 8d).

- 4.8

- Comparisons between TS drawn from distinct model

parameterizations can be easily performed through direct (albeit naïve)

visual comparison of the two series, plotting the differences, or

calculating their Euclidean distance[5] or cross

correlation. However, these comparison approaches are applied

to exact temporal pairwise data and thus fail to account for the

complexities of ABMs that may produce TS that are dissimilar only

through interspersed lags. In addition to Richiardi's (2012) suggestions for robust

TS comparison, we advocate the use of dynamic time

warping (DTW) to address the above complication (Keogh & Ratanamahatana 2005).

DTW is now extensively used in areas such as motion and speech

recognition. Using a stylized pair of TS, Figure 9

depicts the effectiveness of DTW, which identifies comparable pairs of

data occurring at differing time scales.

Figure 9. Comparing distance (similarity) measurement methods between Euclidean Distance (left) and DTW (right) - 4.9

- To further demonstrate DTW's effectiveness, we compare 40

distinct parameter settings in Epstein's ABM of civil violence (Epstein 2002; Wilensky 2004).

The primary

distinction is the initial cop density which ranges from 4.05 and 5.00

in increments of 0.05% and results in varied sizes of the population of

quiet citizens, our outcome measure of interest here. We obtain 10 TS

samples (i.e., model runs) under NetLogo each having a duration of 200

ticks/time points. In Figure 10,

we present four individual runs in order to highlight the difficulty of

direct visual comparison.

Figure 10. Comparing Time Series from Distinct Parameterizations of Quiet Citizens of Civil Violence ABM - 4.10

- While the outcomes are initially similar, they rapidly

diverge sharing only the characteristic of the outcomes' exhibiting

some fluctuation. The two runs under the same parameter setting (4.1%)

naturally diverge due to distinct random sequences (from a RNG using

distinct random seeds).

- 4.11

- Comparisons of all pairs of the experimental conditions are

presented in Figure 11. These

comparisons are performed on normalized outcomes averaged over the

suite of runs. The distance measurements in the left matrix are direct

correlations and Euclidean distances in the right matrix. The cells in

the upper triangle of each matrix correspond to direct comparison of

the TS while those in the lower triangle indicates the measurements

under DTW. The row and columns denote increasing cop densities.

Figure 11. Comparing DTW against non-DTW for correlation and Euclidean distance - 4.12

- The figures illustrate the superiority of DTW in capturing

TS differences under these measurements, over the direct use of exact

temporal pairs. DTW clearly exposes greater similarity of the outcomes'

TS when the experimental parameters (cop density) are also similar

whereas the direct measurements do not. This relationship is largely

monotonic as one would expect. Thus, DTW is appropriate for models for

which the outcome TS's structure (specifically, both its seasonal and

irregular periodicities) is greatly affected by the experimental

conditions.

- 4.13

- Finally, TS analysis provides a highly informative

opportunity to precisely estimate the impact of changes in the input

variables on the outputs of an ABM model. Techniques such as panel

data (or longitudinal)

analysis, which take into account both TS and cross sectional

components of the data, can enable the agent-based modellers to uncover

robust evidence on how model behaviours are associated with the changes

in the variables of the agents and/or the model over time. Other

prominent components of TS analysis (such as forecasting,

classification and clustering,

impulse response function, structural

break analysis, lag analysis,

and segmentation) may also be

used along with estimation and auto-regressive methods in order to

provide a better understanding of series generated by ABMs.

Processing spatial ABM output: approaches and visualization techniques

- 4.14

- The spatial environment in ABMs vary from cellular grids

(in which only inter-agent distance matter) to raster or vector

representations of multiple layers of a rich GIS data. Locations in a

spatial ABM may relate to output metrics at the individual or

aggregated agent level (e.g., income, opinion, or a strategy) or other

spatial qualities (e.g., land-use categories). It is challenging to

seek patterns and to compare across experimental conditions

in a search for a compelling narrative while screening through hundreds

of maps produced by an ABM. Spatial ABM analysis is often informed by

methods from geography and spatial statistics/econometrics. Here, we

review some spatial metrics and visualization approaches for spatial

analysis.

- 4.15

- Quantitative indices such as the Kappa

index of agreement (KIA or Cohen's \(\kappa\)) have

been widely employed for cell-by-cell comparison of ABM outcomes on

spatial maps (Manson 2005).

More recent work suggest alternatives to the \(\kappa\) such as a moving

window algorithm (Kuhnert

et al. 2005) and expose its limitations (Pontius & Millones 2011).

Pontius (2002) proposes

more comprehensive methods and measures for tracking land use changes

and comparing maps under multiple resolutions (from coarse to fine).

- 4.16

- Various spatial metrics

are often used to measure land-use change and detect spatial patterns

such as fragmentation and sprawl (Parker

& Meretsky 2004; Torrens

2006; Liu & Feng 2012;

Sun et al. 2014). These

metrics include mere counting of land-use categories, landscape

shape index, fractal dimension, edge

density, as well as adjacency, contiguity,

and centrality indexes. Moran's I

is another spatial autocorrelation statistic indicating the extent of

dispersion or clustering (Wu 2002).

Millington et al. (2008)

used a contagion index along

with basic patch count metrics to identify fragmentation. Griffith et

al. (2010) used Getis-Ord

Gi* "hotspot" analysis to identify statistically significant

spatial clustering of high/low values while analysing the spatial

patterns of hominids' nesting sites. Zinck and Grimm (2008) used spatial indices (shape

index, edge index ) and basic metrics

(counts and areas of discrete "island" regions) to systematically

compare empirical data to simulation results from the classic

Drossel-Schwabl forest fire cellular automata ABM. Software offering

these metrics include C++ Windows-based 'FRAGSTATS' and the 'SDMTools'

package (for the platform-independent R), the functionality of which

may be augmented by Bio7 and ImageJ for image processing.

- 4.17

- In another example, Sun et al. (2014,

Table 6) reported basic statistics (i.e., mean and standard deviation)

on nine spatial metrics estimated over multiple parameter settings for

varying levels of land market representation in their urban ABM. The

significance of mean differences (across conditions) were assessed with

the Wilcoxon signed rank test.

Means of the metrics were jointly visualized across all parameter

settings in a line graph style called "comprehensive plotting" (Sun et al. 2014, Figures 5–8),

which then allows for identification of conditions producing outlying behaviour facilitating visual sensitivity analysis.

- 4.18

- Obviously, statistical models such as regressions and

ANOVAs may be employed to relate model parameters and outcomes to

spatial outcomes (e.g., Filatova

et al. 2011). However, dependencies among spatially

distributed variables require special treatment in the form of a

weighted matrix incorporated into a spatial

regression model as a predictor or as part of the

error term. Locales may be disambiguated further in statistical

prediction through geographically weighted regression

(GWR). These methods also fall under the auspices of spatial

econometrics.

Reporting and visualizing ABM results over time and space

- 4.19

- Typically, spatial ABM output is rendered as

two-dimensional maps. A collection of these can highlight model

dynamism/progression or allow for comparison of metrics and

experimental conditions (Parry

& Bithell 2012, Figure 14.11) or expose key,

informative trajectories (e.g., Barros

2012, Figure 28.3). 3D views are also used to portray outputs

particularly in evacuation and commuting (Patel

& Hudson-Smith 2012). Often, visual inspection of the

data offers face validation and high-level inference. For example, a spatial

overlay is commonly used to analyse raster outputs

and evaluate the spatial distribution of multiple model behaviours and

outcomes.

- 4.20

- The spatial distribution of outcomes is subject to the

stochastic and path dependent nature of ABMs and often demand

aggregation for effective presentation of model behaviour (Brown et al. 2005). Naturally, a

mean with a confidence interval for an outcome in each spatial location

can be sufficient for reporting a set of maps (over time or across

experimental conditions) (e.g., Tamene

et al. 2014). Alternatively, a frequency

map for a single parameter setting reveals each

location's state transition probabilities (as a proportion of total

simulation runs) (Brown et al. 2005).

These transitions may easily be portrayed as a colour-gradient

map (e.g., Magliocca

2012). Plantinga and Lewis (2014)

warn against constructing deterministic transition rules out of these

probabilities. Brown et al. (2005)

also suggest distinguishing areas of non-transition (or invariant

regions) from variant regions; the method for identifying these areas

is called the variant-invariant method.

- 4.21

- Judicious selection of a temporal sequence of maps can

reveal model dynamics. Software such as a "map comparison kit" (RIKS BV 2010) can perform

automated tests to identify the extent to which two raster maps are

different. Pontius et al. (2008)

extends the comparison exercise to include three maps while considering

pixel error and location error. Comparisons include all pairs of

simulation outputs at times 1 and 2, a "true", reference map at time 2,

and all three maps jointly.

- 4.22

- While showcasing a map as an output of an ABM is always

appealing, supplementing it with summary statistics of spatial patterns

allows for deeper understanding of experimental effects and

consequently the model's behaviour. In addition to the spatial metrics

discussed in 4.3, quantities of land use and conversions may be

reported as histograms across different scenarios and land-use types

over time (e.g., Figure 4 in Villamor et al. (2014)).

- 4.23

- Color-gradients in landscape visualizations can be used to

represent temporal changes in a metric of interest. Filatova (2014) employs these spatio-temporal

change gradients to present land price changes

(due to market trades in an ABM focusing on coastal properties) between

two points in time in an empirical landscape (Figure 12).

Figure 12. Changes in property prices over time - 4.24

- The blue and red gradients correspond to the valence of the

change (i.e., falling and rising prices, respectively); their darkness

or intensity indicate level of change. Such visualizations may offer

easy identification of clusters and boundaries.

- 4.25

- Another obvious way to depict the differences in spatially

distributed ABM output data is to visualize in 3D plots. The 3rd

dimension clearly permits the inclusion of additional information such

as time or one of agents' inner attributes. For example, Huang et al. (2013) employed 3D colored bar

plots (Figure 13) in which the

2D layout corresponds to physical location.

- 4.26

- Such 3D visualizations offer insights into the ABM's

dynamics or highlight vital structural differences across experiments.

For example, Dearden and Wilson (2012)

use a 3D surface to plot the macro metrics of interest of their retail

ABM as a function of different values of the two most critical

parameters affecting agents' choices. 3D surfaces over different

parameters spaces can also be compared with each other, as demonstrated

by Dearden and Wilson in their comparisons of activity within consumer

and retailer agent classes.

Figure 13. Price of land and sequence of transactions under various market conditions when agents have heterogeneous preferences for location. Left: Allocation of land on this market is only preference driven. Right: Land allocation happens through competitive bidding. The higher the bar, the higher the land price. colours denote the time when land was converted into urban use. Source: Huang et al. (2013) - 4.27

- In this example, the color adds a fourth dimension (time of

an event) to the conveyed information. Furthermore, functional shapes

are more easily discernible in 3D; in this case, the upper section of

Figure 13b might be fitted to

a paraboloid or a similar solid of revolution.

- 4.28

- Another efficient use of 3D visualization for ABMs with

geo-spatial elements is shown in Figure 14.

Malleson's model of burglary in the context of urban

renewal/regeneration comprises agents who travel to commit crime in an

urban landscape (Malleson et al.

2013).

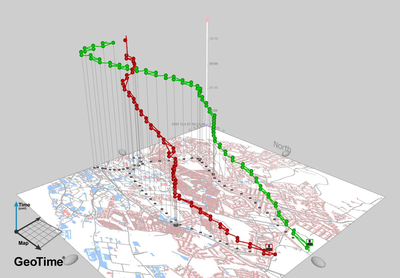

Figure 14. Spatio-temporal Trajectories of Burglars. Source: Nicolas Malleson. http://nickmalleson.co.uk/research - 4.29

- The colored, segmented lines in the figure when overlaid

onto the 2D city map depict the

trajectories of burglar agents seeking targets while the z-axis

denotes the temporal dimension to their journey.

This joint portrayal of time and space succinctly communicates

important features such as key locations of activity for the agents

(i.e., where lines appear vertical) as well as the origin of the agent

and its destination, in this case a presumably "safe" location.

- 4.30

- Finally, given the dynamic nature of ABMs, their spatial and temporal outputs are appropriate for dynamic presentation modalities. Interactive 3D visualizations offer the ability to examine the data from alternative perspectives. Increasingly, video (or animation) has been used to capture ABM behaviour as a means to communicate ABM output to both practitioners as well as the broader lay-audience.[6] Model behaviour may also be captured as animated GIF files, the small sizes of which facilitate their use in presentations and web pages (Lee & Carley 2004; Lee 2004).

Discussion

and conclusions

Discussion

and conclusions

- 5.1

- While ABM as a technique offers many exciting opportunities

to open research frontiers across a range of disciplines, there are a

number of issues that requires rigorous attention when dealing with ABM

output data. This paper highlights the outstanding complexities in the

ABM output data analysis and consolidates currently-used techniques to

tackle these challenges. In particular, we group them into 3 themes:

(i) Statistical issues related to defining the

number of appropriate runs and hypothesis testing,

(ii) Solution space exploration and sensitivity

analysis, and (iii) Processing

ABM output data over time and space. We also

briefly discuss stakeholder involvement in ABM

research (iv) below.

- 5.2

- Statistical issues related to defining a number of

runs and hypothesis testing: For analysing ABMs, the

calculation of statistics from model outcomes across multiple

simulation runs is required. However, the statistical methods are

challenged by both a plethora of ABM output data for which traditional

statistical tests will expose minute effects and complex ABMs for which

runs are costly. In the former scenario, statistical methods need to be

tempered (e.g., a more critical \(p\)-value) or an acceptable ceiling

on the number samples (or runs) should be enforced. For the latter

case, a predetermination of test sensitivity (e.g., effect size) must

be made before calculating a minimum number of runs. However, the

stability of outcome variance needs to be secured, and we demonstrate

and review approaches for estimating the point at which this is

achieved. We also reveal that the traditional approach to determining

minimum sample size is sensitive to the shape of the distribution and

we suggest empirical estimation of the power level or use of the more

conservative Wilcoxon rank sum test. Another challenge is the analysis

of many influencing variables. The analysis of complex

interdependencies within a simulation model can be addressed by

systematic design of experiment principles, and univariate analysis may

support the analysis by pre-defining parameter ranges. Overall, ABM

researchers should be aware of the statistical pitfalls in the analysis

of simulation models and of the methods described to address these

challenges.

- 5.3

- Solution space exploration and sensitivity analysis:

An ABM cannot be properly understood without exploring the range of

behaviours exhibited under different parameter settings and the

variation of model output measures stemming from both random and

parametric variation. Accordingly, it is important for ABM analysts and

researchers to be familiar with the range of methods and tools at their

disposal for exploring the solution space of a model, and for

determining how sensitive model outputs are to different input

variables. ABMs pose particular challenges for SA, due to the

nonlinearity of interactions, the non-normality of output

distributions, and the strength of higher-order effects and variable

interdependence. While some model analyses may find success using

simple/classic SA techniques, practitioners would do well to learn

about some of the newer and more sophisticated approaches that have

been (and are being) developed in an effort to better serve the ABM

community.

- 5.4

- Processing ABM output data over time and space:

While every ABM has the potential to produce high resolution panel data

on aggregated and agent-level metrics over long time periods, the

standard time series techniques are rarely applied. We argue that the

use of time series techniques such as decomposition and moving averages

analysis not only improve the scientific value of ABM results but also

help gaining valuable insights – e.g., the emergence of the two regimes

in the data over time – that are likely to be omitted otherwise. The

use of Euclidean distance similarity measurement and dynamic time

warping offers high utility especially when temporally varying outputs

need to be compared between experiments or in a sensitivity analysis

exercise. When dealing with spatial data analysis, ABM researchers

actively use methods developed in geography such as spatial indexes,

map comparison techniques, series of 2D or 3D maps, 2D histograms, and

videos. In addition, ABM-specific methods are being actively proposed –

such as 3D histograms reflecting temporal changes over a spatial

landscape, spatio-temporal output variable change gradients, as well as

overlaying temporal ABM dynamics over a 2D map.

- 5.5

- Communicating ABM results to stakeholders:

The utility and effectiveness of many ABMs and their outputs are often

judged by the model's consumers: the user, the stakeholder, or

decision-maker. Thus, a qualitative understanding of the model is

essential as model acceptance and adoption depend strongly on

subjective, qualitative considerations (Bennett

et al. 2013).

- 5.6

- The clarity and transparency of ABM mechanisms facilitate

stakeholder involvement in the modelling process. This participatory

modelling is a powerful strategy to facilitate decision-making,

management, and consensus building (Voinov

& Bousquet 2010).

In contrast to other techniques

such as System Dynamics (SD), Computational General Equilibrium, or

Integrated Assessment Modelling, ABM rules are explicit, directly

embedded in the model, and do not necessarily have to be aggregated or

proxied by obscure equations. Thus, ABMs have been historically at the

forefront of participatory modelling. Communicative graphical user

interfaces (GUIs) in platforms such as NetLogo and Cormas have also

contributed to the clarity in presentation and ease of interpretation

(as they have done for SD's Stella or Vensim). A clone of participatory

modelling with ABMs conceived by French modellers became known as companion modelling (Bousquet et

al. 1999; Barreteau et

al. 2003; Étienne 2014)

and is applied globally particularly in developing nations (Becu et al. 2003; Campo et al. 2010; Worrapimphong et al. 2010).

As the focus is on the model as a process rather than a product (Voinov & Bousquet 2010),

co-learning between stakeholders and modellers results in expedient

cycles of modelling, output presentation and discussion, and subsequent

amendment of the model.

- 5.7

- Moss (2008)

notes that evidence should precede theory, whenever modelling becomes

embedded in a stakeholder process. Thus, interpretation of model

outputs requires more than mere quantitative evaluation and

interpretation, and the necessary task of weighing model outputs

against values and perceptions of both stakeholders and modellers alike

continues to challenge us (Voinov et

al. 2014).

- 5.8

- Directions for future research: Most of the challenges and techniques considered in this paper are quite computationally intensive. Yet, the fact that an analysis of ABM output data often requires more time and attention than the design and coding of an ABM itself is still largely underestimated especially by amateurs in the ABM field. Thus, user-friendly software products that support design of experiments (e.g., the AlgDesign package in R (Wheeler 2011)),[7] parameter space exploration, sensitivity analysis, temporal and spatial data exploration are in high demand. For example, the MEME software is one step toward this goal and is a valuable tools for ABM researchers. ABMs would ideally support real world decision-making, hence efficient, user-friendly ABM platforms, supporting data analysis and visualization, would reinforce use of ABM in participatory modelling. Moreover, insights into advanced statistical techniques could assist in resolving some of the statistical issues discussed in Section 2.

Acknowledgements

Acknowledgements

- This material is based upon work supported by NWO DID MIRACLE (640-006-012), NWO VENI grant (451-11-033), and EU FP7 COMPLEX (308601). Furthermore, our thanks go to all participants of Workshop G2 during the iEMSs 2014 Conference in San Diego.

Notes

Notes

-

1This

paper was inspired by a workshop at the 2014 iEMSs conference: the G2

workshop "Analyzing and Synthesizing Results from Complex

Socio-ecosystem Models with High-dimensional Input, Parameter, and

Output Spaces". During that workshop, several key issues facing today's

simulation modellers were identified and discussed. The ones deemed to

be more exigent have become the focus of this paper.

2The normal or Gaussian distribution also known as the "bell-curve" is the most easily recognized empirical distribution containing a single mode and often captures many naturally observed outcomes. The uniform distribution is a flat, artificial distribution and can be considered to serve as the control distribution among this set. The exponential is often used to model failure rates and is amodal and skewed. The Poisson expresses the probability of a given number events occurring within a known interval. The \(\chi^2\) (chi-squared) is typically employed in statistical tests as well as the Student's t distribution. We include these two as they are readily recognizable by many practitioners of applied statistics.

3The effect size calculation we employ is Cohen's \(d\) (Cohen 1988): \(d = \frac{\mu_1 - \mu_2}{s_{\rm pooled}}\).

4LeBaron et al. (1999, p. 1512), for example, note that their artificial stock market has time series capturing phenomena observed in real markets, including weak forecastability and volatility persistence.

$$ \mathrm{Euclidean~distance} = \sqrt{\sum^{n}_{i=1} (x_i - y_i)^2} $$ where \(n\) is the number of data points in each vector.

6For examples, we cite Epstein & Axtell (1996) and the corresponding video: https://www.youtube.com/watch?v=SAXWoRcT4NM and Helbing et al. (2005) and the corresponding video: https://www.youtube.com/watch?v=yW33pPius8E.

7Further options in R for DOE may be found at http://cran.r-project.org/web/views/ExperimentalDesign.html.

Appendix

A: Minimum Sample Size for Distributions

Appendix

A: Minimum Sample Size for Distributions

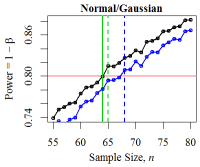

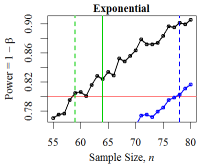

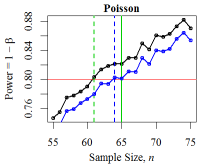

-

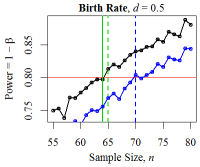

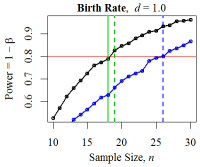

Figure 15. Minimum Sample Size for Three Distributions The black curve denotes the empirical power level. The red line denotes the desired power level: \(1 - \beta = 0.80\). The solid green vertical line denotes the minimum sample size derived from the power calculation while the dotted green line shows the empirically-derived size. The blue curve and line denote the power and minimum size according to the two sample Mann-Whitney-Wilcoxon test.

Figure 16. Minimum Sample Sizes for Birth Rate ABM The red line denotes the desired power level: \(1 - \beta = 0.80\). The solid green vertical line denotes the minimum sample size from the power calculation; the dotted green line shows the empirically- derived size; and the blue curve and vertical line denote the power and minimum sample size according to the Mann-Whitney-Wilcoxon test.

Appendix

B: Issues of Hypothesis Testing

Appendix

B: Issues of Hypothesis Testing